The Basics of Sound Synthesis

Sound synthesis has been around for well over a hundred years. “The Telharmonium (also known as the Dynamophone) […] was developed by Thaddeus Cahill circa 1896.” (source). The basic premise was additive synthesis, and the device used tonewheels, as did the Hammond organ. These electromagnetic and electromechanical strategies provided the basis for the proliferation of innovative electronic instruments and designs in the second half of the 20th Century.

In 1928, Maurice Martenot invented the Ondes Martenot, which was played with a metal ring worn on the right index finger. The ring was slid along a wire to produce pitched electronic tones. Subsequent versions added a non-functioning keyboard that gave the player a visual indication of pitch based on their position along the wire. Still later versions introduced a functioning keyboard along with other features that were to become standard in modern synthesizers, such as touch sensitivity, vibrato and the use of multiple waveforms. This instrument was used in several landmark compositions, most notably Olivier Messiaen’s Turangalîla-Symphonie. It’s an absolute masterpiece you should hear performed live if you ever get the chance.

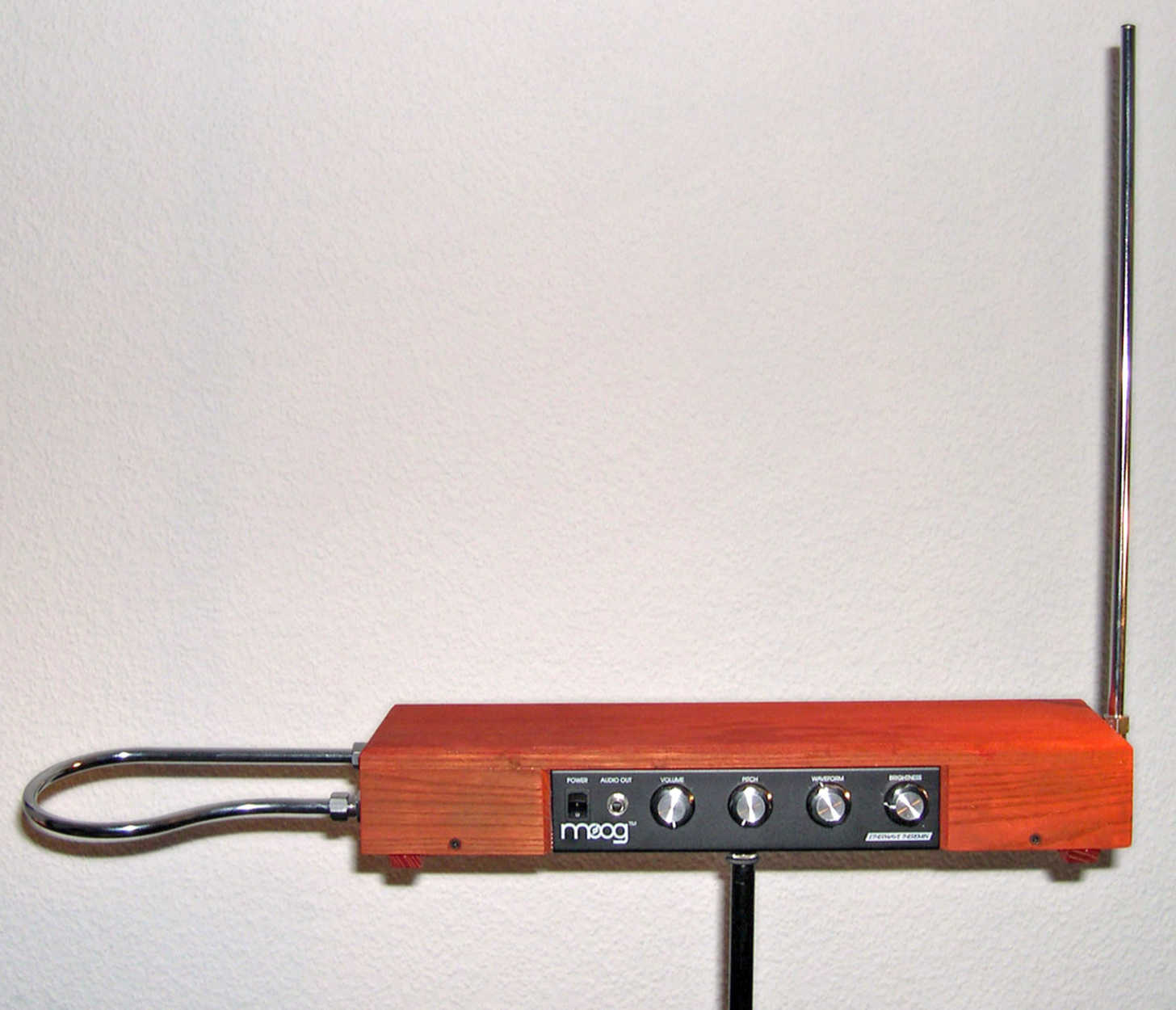

Probably the most recognizable electronic sound of the 20th Century is the Theremin, which Léon Theremin invented around 1920 and patented in the United States in 1928. Its spooky electronic vibrato sound became an iconic fixture in horror and suspense film scores and is still a favorite among contemporary composers.

What remains magical about this instrument is that it does not require physical contact to be played. Instead, the performer uses one hand to control the frequency and the other to control amplitude via proximity to two antennas. In the hands of virtuosic performers such as Lydia Kavina, the Theremin is capable of expressive and precisely pitched string-like lines. Robert Moog was known to be fascinated by the Theremin and built his own at the age of 14 from plans in Electronics World magazine.

The modern-day Vocoder owes its existence to early research at Bell Labs, where Homer Dudley invented the technology. It was initially intended to reduce the bandwidth of speech signals so they could be transmitted over long distances, but its robotic sound was later popularized by Kraftwerk, the iconic German band that pioneered early electronic music.

This article provides an overview of the synthesis methods in use today, with links along the way to articles that take a deeper dive into each one. It also includes a list and description of common parameters and control methods, as well as a discussion of hardware versus software. But since we are talking about electricity and sound, let’s start with signal flow.

Typical Sections and Signal Flow

User Input

In all cases, some sort of user input initiates the signal in a system. Even in the extreme case of algorithmic or AI-based models, someone needs to program the underlying architecture and hit the start button. But as we are talking about instruments, consider the various ways a musician can trigger a sound.

There are myriad controllers available that can be connected to synthesizer modules or a computer. They typically (although not always) communicate via MIDI, and they include keyboards, MIDI guitars, breath controllers, knob and slider devices, iOS apps and all sorts of alternative controllers.

Check the articles below for more about controllers.

- “The Complete Guide to Choosing a MIDI Controller”

- “8 Unusual MIDI Controllers for Music Production”
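Whatever the controller, a MIDI note-on message carries a note number (0 to 127) and a velocity. The minimal sketch below maps a note number to pitch. It assumes twelve-tone equal temperament with A4 = 440 Hz assigned to MIDI note 69. The linear velocity-to-gain mapping is a hypothetical choice for illustration, because instruments use many different velocity curves.

```python
def midi_to_freq(note: int, a4_hz: float = 440.0) -> float:
    """Equal-tempered frequency in Hz for a MIDI note number (69 = A4)."""
    # Each semitone is a factor of 2 ** (1/12); 12 semitones double the frequency.
    return a4_hz * 2.0 ** ((note - 69) / 12.0)


def velocity_to_gain(velocity: int) -> float:
    """Hypothetical linear mapping of MIDI velocity (0-127) to a gain of 0.0-1.0."""
    return max(0, min(velocity, 127)) / 127.0


if __name__ == "__main__":
    for note in (57, 60, 69, 81):
        print(note, round(midi_to_freq(note), 2))
```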

Sound Generation

Following the user input is the sound generation section. Its nature depends on the method of synthesis being used, and it can consist of oscillators, noise generators, recorded samples, wavetables, sound-generating algorithms or any combination of these.
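The sketch below makes "oscillators" and "noise generators" concrete. It is a minimal set of naive waveform generators that fill a buffer of samples, assuming a sample rate of 44.1 kHz. These generators are deliberately simple, and the sawtooth and square are not band-limited. At high pitches they alias, which is one reason real instruments use more careful techniques.

```python
import math
import random

SAMPLE_RATE = 44_100  # assumed sample rate in Hz


def oscillator(shape: str, freq: float, n_samples: int, sr: int = SAMPLE_RATE) -> list[float]:
    """Naive (non-band-limited) oscillator returning samples in [-1, 1]."""
    out = []
    phase = 0.0        # normalized phase in [0, 1)
    inc = freq / sr    # phase advance per sample sets the pitch
    for _ in range(n_samples):
        if shape == "sine":
            out.append(math.sin(2 * math.pi * phase))
        elif shape == "saw":
            out.append(2.0 * phase - 1.0)            # ramps from -1 up toward +1
        elif shape == "square":
            out.append(1.0 if phase < 0.5 else -1.0)
        elif shape == "noise":
            out.append(random.uniform(-1.0, 1.0))    # white noise ignores freq
        else:
            raise ValueError(f"unknown shape: {shape}")
        phase = (phase + inc) % 1.0
    return out
```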

Filtering

After some sound is generated it is often sent to a filtering section where frequencies can be sculpted using the full complement of filter types, although the low-pass filter is the most commonly used.

Amplification

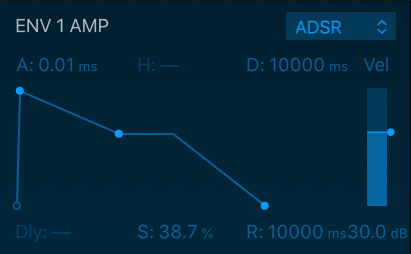

The last of the three major sections in the signal flow is where the signal gets amplified and shaped before being sent to the output. This section typically includes an envelope that is triggered by the user’s input and generally has four basic stages: attack, decay, sustain and release (ADSR). More on this later.
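The sketch below shows the four ADSR stages as a function of time. It assumes linear segments, although many synths use exponential curves. It also assumes times in seconds and a note held for `gate_time` seconds. The release stage starts from wherever the envelope was at note-off, so a short note released during the attack still fades out smoothly. The amplifier stage then multiplies each sample by this gain.

```python
def adsr_level(t, gate_time, attack, decay, sustain, release):
    """Linear ADSR gain (0..1) at time t seconds after note-on.

    attack, decay and release are durations in seconds; sustain is a level (0..1);
    gate_time is when the key is released (note-off).
    """
    def held_level(x):
        # Level the envelope would have at time x while the key is still down.
        if x < attack:
            return x / attack
        if x < attack + decay:
            return 1.0 - (1.0 - sustain) * (x - attack) / decay
        return sustain

    if t < 0:
        return 0.0
    if t < gate_time:
        return held_level(t)
    # Release: fall linearly to zero from the level reached at note-off.
    start = held_level(gate_time)
    elapsed = t - gate_time
    if elapsed >= release:
        return 0.0
    return start * (1.0 - elapsed / release)


def apply_amp_envelope(samples, sr, gate_time, a, d, s, r):
    """Amplifier stage: scale each sample by the envelope gain at its time."""
    return [x * adsr_level(i / sr, gate_time, a, d, s, r) for i, x in enumerate(samples)]
```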

Modulation

This section is not inserted in the signal flow per se. Instead, it includes data-generating tools to alter the parameters in other sections and sometimes even parameters of the modulators themselves. These modulators can include LFOs (low frequency oscillators), envelopes, step sequencers, etc.
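One common way to organize this is a modulation matrix, in which each route connects a source to a destination parameter with a depth. The sketch below is a minimal, hypothetical version, and its source names, parameter names and depth units are assumptions for illustration. The second route targets the LFO’s own rate, which is how a modulator can itself be modulated.

```python
from dataclasses import dataclass


@dataclass
class Route:
    source: str        # hypothetical source name, e.g. "lfo1" or "env2"
    destination: str   # parameter name, e.g. "cutoff_hz"
    depth: float       # scales the source value before it is added


def modulated_params(base: dict[str, float], sources: dict[str, float],
                     routes: list[Route]) -> dict[str, float]:
    """Return a copy of the base parameters with every route's contribution added."""
    out = dict(base)
    for r in routes:
        out[r.destination] += r.depth * sources[r.source]
    return out


base = {"cutoff_hz": 800.0, "lfo1_rate_hz": 2.0}
routes = [
    Route("lfo1", "cutoff_hz", 400.0),   # LFO sweeps the cutoff by up to +/-400 Hz
    Route("env2", "lfo1_rate_hz", 3.0),  # an envelope speeds up the LFO itself
]
print(modulated_params(base, {"lfo1": 0.5, "env2": 1.0}, routes))
```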

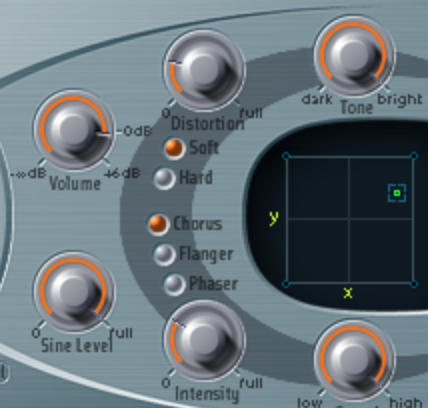

Effects Section

Modern synthesizers (especially soft synths) have onboard effects sections that can include distortion, saturation, chorus, flanger, reverb, delay, panning and EQ. These are usually inserted before or after filtering depending on the device. More elaborate synths often have send/return capability and more sophisticated routing possibilities.

Not all synths have all these sections and some have sections unique to the method being used (e.g. granular or component modeling synths). But if you can get a firm grasp of the basic signal flow outlined above, you will be much better prepared to understand just about any synth you might come across.

The Methods

Additive Synthesis

As mentioned, additive synthesis is one of the oldest types out there. One challenge for using this method is that you need many more oscillators to produce rich timbres when compared to other methods. This was a particular problem when computing was in its nascency. As described by Curtis Roads, “additive synthesis is a class of sound synthesis techniques based on the summation of elementary waveforms to create a more complex waveform.” (Roads, 2012, p. 134)

For more on this method check out: “The Basics of Additive Synthesis”
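Roads’ definition translates directly into code. The minimal sketch below sums sine partials at integer multiples of a fundamental, each with its own amplitude. It also shows the cost mentioned above, since every partial is one more oscillator to compute. Giving harmonic k an amplitude of 1/k approximates a sawtooth. Partials above the Nyquist frequency (half the assumed 44.1 kHz sample rate) are skipped to avoid aliasing.

```python
import math


def additive(f0, partial_amps, n_samples, sr=44_100):
    """Sum of sine partials: partial k (1-based) has frequency k*f0 and amplitude partial_amps[k-1]."""
    out = []
    for i in range(n_samples):
        t = i / sr
        s = 0.0
        for k, amp in enumerate(partial_amps, start=1):
            if k * f0 < sr / 2:        # skip partials above Nyquist
                s += amp * math.sin(2 * math.pi * k * f0 * t)
        out.append(s)
    return out


# 20 harmonics with 1/k amplitudes approximate a sawtooth wave.
saw_like = additive(110.0, [1.0 / k for k in range(1, 21)], 441)
```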

Subtractive Synthesis

This method generally uses the main sections described above and relies on the filtering section in particular to generate rich timbres and variety. It requires far fewer resources than an additive model: only a rich sound source, such as a sawtooth wave or noise generator, and a robust filtering section.

More on subtractive synthesis: “The Fundamentals of Subtractive Synthesis”
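The minimal sketch below shows the subtractive idea. It assumes a naive sawtooth as the rich source and a one-pole low-pass filter, which is far gentler than the resonant filters found on most synths. The coefficient formula is a common approximation, not any particular instrument’s design. The `roughness` helper is a crude, hypothetical stand-in for high-frequency content. It shows that lowering the cutoff removes more harmonics and smooths the waveform.

```python
import math


def saw(freq, n_samples, sr=44_100):
    """Naive sawtooth in [-1, 1): a harmonically rich source."""
    return [2.0 * ((i * freq / sr) % 1.0) - 1.0 for i in range(n_samples)]


def one_pole_lowpass(x, cutoff_hz, sr=44_100):
    """6 dB/octave low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sr)
    y, prev = [], 0.0
    for sample in x:
        prev += a * (sample - prev)
        y.append(prev)
    return y


def roughness(x):
    """Mean absolute sample-to-sample change (crude proxy for brightness)."""
    return sum(abs(b - a) for a, b in zip(x, x[1:])) / (len(x) - 1)


raw = saw(220.0, 4410)
bright = one_pole_lowpass(raw, 5000.0)
dark = one_pole_lowpass(raw, 500.0)
print(roughness(raw), roughness(bright), roughness(dark))
```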

FM Synthesis

Frequency modulation synthesis is based on the idea of using one oscillator (the modulator) to modulate another oscillator (the carrier). From this essential idea, an incredible amount of timbral variety can be produced. The introduction of the revolutionary DX7 by Yamaha in the early 80s blew the lid off the possibilities of digital synthesis given the limitations of computing power at the time.

More on FM synthesis here: “Introduction to FM Synthesis”
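The minimal sketch below shows the two-oscillator idea, using the classic formula y = sin(2π·fc·t + I·sin(2π·fm·t)). Here fc is the carrier frequency, fm is the modulator frequency and I is the modulation index. This formula is technically phase modulation, which is how most digital FM instruments, the DX7 included, are usually described as implementing it. The spectrum contains sidebands at fc ± k·fm, and raising I spreads energy into more of them.

```python
import math


def fm_tone(fc, fm, index, n_samples, sr=44_100):
    """Two-operator FM (phase-modulation form).

    fc: carrier frequency in Hz, fm: modulator frequency in Hz,
    index: modulation index I (0 gives a plain sine carrier).
    """
    out = []
    for i in range(n_samples):
        t = i / sr
        modulator = math.sin(2 * math.pi * fm * t)
        out.append(math.sin(2 * math.pi * fc * t + index * modulator))
    return out


# A 1:1 carrier-to-modulator ratio gives a harmonic spectrum; the larger
# index adds more sidebands, so the second tone is brighter.
mellow = fm_tone(220.0, 220.0, 0.5, 4410)
bright = fm_tone(220.0, 220.0, 5.0, 4410)
```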

AM Synthesis

Amplitude modulation (AM) is more of an effect than a primary means of synthesis. Like FM, it uses modulator and carrier oscillators, but here the modulator varies the carrier’s amplitude rather than its frequency. When the modulator has no DC offset, the result is ring modulation: the carrier’s amplitude periodically swings through zero, the carrier frequency itself disappears, and new sum and difference frequencies are created. By changing the depth and DC offset of the modulating signal, AM can produce a variety of tremolo-based sounds at low modulator frequencies and audible sideband frequencies at higher modulation rates.

More in this article: “The Fundamentals of AM Synthesis”
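The minimal sketch below covers both cases. The gain applied to the carrier is a DC offset plus a scaled modulator. At `depth=1.0` there is no offset, so the output is pure ring modulation. The trigonometric identity sin(a)·sin(b) = ½[cos(a − b) − cos(a + b)] explains the result: only the difference and sum frequencies remain. At low `mod_hz` values (for example 5 Hz) and smaller depths, the same function produces tremolo. The `depth` parameterization is an assumption for illustration.

```python
import math


def am(carrier_hz, mod_hz, depth, n_samples, sr=44_100):
    """Amplitude modulation with a DC offset.

    depth=0.0 leaves the carrier untouched; depth=0.5 dips the amplitude to zero
    once per modulator cycle (classic AM); depth=1.0 removes the DC offset
    entirely, giving ring modulation (carrier * modulator).
    """
    out = []
    for i in range(n_samples):
        t = i / sr
        mod = math.sin(2 * math.pi * mod_hz * t)
        gain = (1.0 - depth) + depth * mod   # DC offset plus scaled modulator
        out.append(gain * math.sin(2 * math.pi * carrier_hz * t))
    return out


# 440 Hz ring-modulated by 100 Hz contains only 340 Hz and 540 Hz.
ring = am(440.0, 100.0, 1.0, 200)
tremolo = am(440.0, 5.0, 0.3, 44_100)
```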

Phase Distortion Synthesis

This method was used by Casio in the CZ-101 and some other models introduced in the mid-1980s. The CZ-101 holds a special place in my heart as my first synth. Phase distortion uses an interesting and unique idea that involves scanning “through a basic sine wave lookup table at an increasing and then decreasing speed while keeping the overall frequency constant as it relates to the pitch or note”.

More on this method here: “The Fundamentals of Phase Distortion Synthesis”
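The quoted idea can be sketched with a piecewise-linear phase warp. This modeling choice is an assumption, not Casio’s documented circuit. The first half of a cosine cycle is read quickly, within a fraction `d` of the period. The second half is read more slowly, over the remaining `1 - d`. Each cycle still takes the same time, so the pitch stays put while the waveform bends toward a sawtooth-like shape as `d` shrinks. `d` is a hypothetical parameter name, and `d = 0.5` gives an unmodified cosine.

```python
import math


def pd_wave(freq, d, n_samples, sr=44_100):
    """Phase distortion sketch: read a cosine with a warped phase.

    d (0 < d < 1) is the knee point where the read speed changes.
    """
    out = []
    phase = 0.0
    inc = freq / sr
    for _ in range(n_samples):
        if phase < d:
            warped = 0.5 * phase / d                      # fast: half a cycle in d
        else:
            warped = 0.5 + 0.5 * (phase - d) / (1.0 - d)  # slow: the rest in 1 - d
        out.append(math.cos(2 * math.pi * warped))
        phase = (phase + inc) % 1.0
    return out


cosine = pd_wave(441.0, 0.5, 100)    # no distortion
bent = pd_wave(441.0, 0.1, 100)      # sharper, brighter shape at the same pitch
```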

Vector Synthesis

This method mixes the output of two or more sound sources. These are typically oscillators, although several sampling instruments use a similar approach, blending combinations of sampled sounds through some sort of XY pad interface. In its simplest form, combining just two oscillators can be considered a form of vector synthesis, but typically there are more, and the oscillators can often be detuned in relation to each other. Many subtractive synth models use a vector approach to create a rich sound source as input for the filtering section.
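The XY-pad idea can be sketched as a bilinear crossfade between four sources. The position (x, y) sets four weights that always sum to 1. The corner layout below is an assumption for illustration, and instruments differ in how they arrange and scale their sources.

```python
def vector_mix(a, b, c, d, x, y):
    """Blend four equal-length sample lists using an XY position in [0, 1] x [0, 1].

    Assumed corners: a at (0, 0), b at (1, 0), c at (0, 1), d at (1, 1).
    """
    wa = (1 - x) * (1 - y)
    wb = x * (1 - y)
    wc = (1 - x) * y
    wd = x * y                     # the four weights always sum to 1
    return [wa * sa + wb * sb + wc * sc + wd * sd
            for sa, sb, sc, sd in zip(a, b, c, d)]


# Center of the pad: an equal blend of all four sources.
print(vector_mix([1, 1], [2, 2], [3, 3], [4, 4], 0.5, 0.5))
```

Moving the XY position over time, for example with an LFO or envelope, is what makes the blend evolve.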

Wavetable Synthesis

Wavetable synthesis involves reading through a lookup table that can hold anywhere from one single-cycle waveform to dozens or hundreds of them. The process of reading through these waveforms and morphing between shapes can be modulated and controlled by the user. “The interpolation between wave shapes is what creates the characteristic sound of a wavetable synth.”

More here: “The Basics of Wavetable Synthesis”
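The minimal sketch below shows both kinds of interpolation. Within a frame, linear interpolation reads between stored samples. Between frames, a `position` parameter crossfades neighboring waveforms. The two-frame table (a sine and a five-harmonic saw-like wave) and the 256-sample frame size are assumptions to keep the example short, since real tables often hold dozens of frames.

```python
import math

TABLE_SIZE = 256  # samples per single-cycle frame (assumed)


def make_frames():
    """Hypothetical two-frame table: a sine and a 5-harmonic saw-like wave."""
    sine = [math.sin(2 * math.pi * i / TABLE_SIZE) for i in range(TABLE_SIZE)]
    saw_like = [sum(math.sin(2 * math.pi * k * i / TABLE_SIZE) / k for k in range(1, 6))
                for i in range(TABLE_SIZE)]
    return [sine, saw_like]


def read_frame(frame, phase):
    """Linearly interpolated lookup within one frame; phase in [0, 1)."""
    pos = phase * len(frame)
    i = int(pos)
    frac = pos - i
    return frame[i % len(frame)] * (1 - frac) + frame[(i + 1) % len(frame)] * frac


def wavetable_osc(frames, freq, position, n_samples, sr=44_100):
    """position in [0, 1] morphs across the frames (needs at least two frames).

    Here position is fixed; a synth would typically modulate it over time.
    """
    out, phase = [], 0.0
    scaled = position * (len(frames) - 1)
    lo = min(int(scaled), len(frames) - 2)
    mix = scaled - lo                     # crossfade amount between frames lo and lo+1
    for _ in range(n_samples):
        s = (1 - mix) * read_frame(frames[lo], phase) + mix * read_frame(frames[lo + 1], phase)
        out.append(s)
        phase = (phase + freq / sr) % 1.0
    return out
```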

Component or Physical Modeling

This method can be computationally demanding compared to other methods and relies on complex algorithms. The metaphor of a vibrating string is often used to create unusual and often otherworldly timbres that are perfect for sound design applications. I am also a fan of the percussive, mallet-like sounds possible with this method, which seem to lie somewhere between the acoustic and electronic worlds.

More on the subject here: “The Fundamentals of Physical Modeling Synthesis”
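A classic entry point to string modeling is the Karplus-Strong algorithm. It is a much simpler relative of the waveguide models used in modern instruments, shown here as an illustration rather than as how any specific synth works. A delay line one period long is filled with noise (the "pluck"). Each time a sample comes around again, it is averaged with its neighbor and scaled by a damping factor. The averaging acts as a gentle low-pass, so the tone both decays and darkens like a real string. `damping` is a hypothetical parameter name, and the pitch is only approximately `freq` because the delay length is rounded to whole samples.

```python
import random


def karplus_strong(freq, n_samples, sr=44_100, damping=0.996, seed=None):
    """Karplus-Strong plucked string."""
    rng = random.Random(seed)
    period = max(2, int(round(sr / freq)))          # delay length in samples sets the pitch
    line = [rng.uniform(-1.0, 1.0) for _ in range(period)]   # noise burst = the pluck
    out = []
    for n in range(n_samples):
        i = n % period
        current = line[i]
        nxt = line[(i + 1) % period]                # the sample due out next
        out.append(current)
        # Average with the neighbor (low-pass), damp, and feed back into the loop.
        line[i] = damping * 0.5 * (current + nxt)
    return out


pluck = karplus_strong(220.0, 44_100, seed=1)
```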

Granular Synthesis

This is another method that was once considered impractical for real-time applications due to its computational requirements. Those days are long gone, and real-time granular synthesis is now completely possible on any computer, tablet or smartphone. The essential idea is to take a recorded sample and chop it up into small pieces, or grains, anywhere from 1 to 100 ms in duration. These grains can then be operated on independently by pitch shifting, reversing, reordering and other methods.

More about this here: “Overview of Granular Synthesis”
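The minimal sketch below illustrates granular processing. It picks grains from random positions in a source (reordering), reverses some of them, fades each one in and out with a Hann window to avoid clicks, and overlap-adds them at a fixed hop. The 50 ms grain size, 25 ms hop, Hann window and reverse probability are all illustrative assumptions, and pitch shifting of individual grains is left out for brevity.

```python
import math
import random


def hann(n):
    """Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def granulate(source, sr=44_100, grain_ms=50.0, n_grains=40, hop_ms=25.0,
              reverse_prob=0.3, seed=None):
    """Minimal granular sketch: random, windowed, sometimes reversed grains, overlap-added."""
    rng = random.Random(seed)
    glen = int(sr * grain_ms / 1000)
    hop = int(sr * hop_ms / 1000)
    if len(source) < glen:
        raise ValueError("source shorter than one grain")
    window = hann(glen)
    out = [0.0] * (hop * (n_grains - 1) + glen)
    for g in range(n_grains):
        start = rng.randrange(0, len(source) - glen + 1)   # random read position
        grain = source[start:start + glen]
        if rng.random() < reverse_prob:
            grain = grain[::-1]                             # reversed grain
        offset = g * hop
        for i in range(glen):
            out[offset + i] += grain[i] * window[i]
    return out
```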

Sampling

Although not strictly speaking a form of synthesis, samplers need to be included here because many of the techniques found in synthesizers can also be found in samplers including filtering, effects processing, modulation methods and vector style morphing as mentioned earlier. Pure samplers use recorded sounds as the main sound source(s). There can be hundreds of samples in one instrument, as is often the case with so-called deep-sampled instruments. An example is an acoustic sample library like a piano, where every key might be sampled independently at a variety of dynamic levels or with different pedal techniques. This painstaking process is why some orchestral libraries are so expensive.

More on samplers here: “The Fundamentals of Sampling Instruments and Libraries”
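At its core, sample playback is reading a recording back at a different speed. The minimal sketch below repitches a sample by a number of semitones. A ratio of 2 ** (semitones / 12) means +12 plays twice as fast and an octave higher, and linear interpolation fills in between stored samples. Stretching one recording over a wide range changes its character, which is part of why deep-sampled instruments record so many notes and dynamic levels. Real samplers use higher-quality interpolation than this.

```python
def play_sample(sample, semitones, n_out=None):
    """Repitch a recorded sample by reading it at a different speed."""
    ratio = 2.0 ** (semitones / 12.0)   # playback speed multiplier
    out = []
    pos = 0.0
    while pos < len(sample) - 1 and (n_out is None or len(out) < n_out):
        i = int(pos)
        frac = pos - i
        out.append(sample[i] * (1.0 - frac) + sample[i + 1] * frac)
        pos += ratio
    return out


ramp = [float(i) for i in range(10)]
print(play_sample(ramp, 12))    # an octave up: every other sample
```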

Hybrid Instruments

Several of the methods discussed above might be found within one single device as more and more hybrid instruments continue to emerge. Sometimes being a jack of all trades is indeed possible in the synthesis world. But specialization has its benefits, so if you want a great granular synthesizer, for instance, look for something billed as such.

Common Modulation Methods

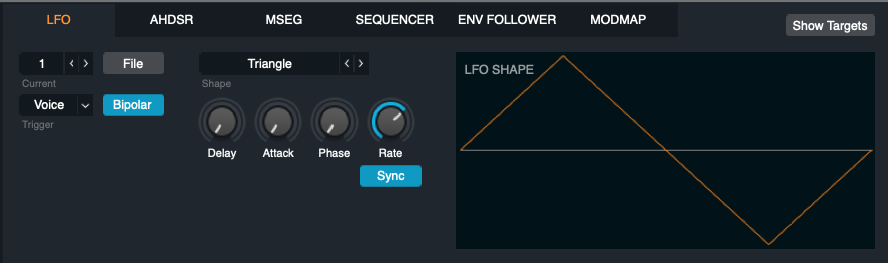

The Humble LFO

I would say that about 90% of my beginning students can identify LFO as short for low frequency oscillator. But only about 25% can tell me what that means or how it’s used. These simple oscillators churn out periodic data based on a chosen waveform shape, at rates typically well below 20 Hz, the approximate lower limit of human hearing. They can be extremely slow, like 0.1 Hz or slower. They can be used to modulate any parameter in the device, as long as the instrument allows that routing.

More here: “5 Essential LFO Parameters You Should Know”
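The minimal sketch below shows an LFO as a function of time that returns a bipolar value in [-1, 1] for a few common shapes. It then uses a very slow (0.1 Hz) triangle to sweep a filter cutoff. The base cutoff and depth values are arbitrary illustration choices.

```python
import math


def lfo(shape, rate_hz, t):
    """Bipolar LFO value in [-1, 1] at time t seconds."""
    phase = (rate_hz * t) % 1.0
    if shape == "sine":
        return math.sin(2 * math.pi * phase)
    if shape == "triangle":
        return 1.0 - 4.0 * abs(phase - 0.5)    # -1 at phase 0, +1 at phase 0.5
    if shape == "square":
        return 1.0 if phase < 0.5 else -1.0
    if shape == "saw":
        return 2.0 * phase - 1.0
    raise ValueError(f"unknown shape: {shape}")


def cutoff_at(t, base_hz=1000.0, depth_hz=500.0):
    """A 0.1 Hz triangle sweeping the cutoff between 500 Hz and 1500 Hz (10 s per cycle)."""
    return base_hz + depth_hz * lfo("triangle", 0.1, t)
```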

Envelopes

The typical ADSR (attack, decay, sustain and release) envelope that most people can identify is usually attached or hardwired to amplitude. But envelopes can be used to modulate any parameter that LFOs can. The difference is that envelopes are often tied to user input. For example, as a MIDI note-on event is triggered, an envelope might also be triggered that is tied to the cutoff frequency of a low-pass filter. Think of envelopes as a table of data that is released over a user-defined amount of time. This is often triggered by the pressing and releasing of a MIDI key.

More on this subject here: “The Basics of Synth Envelope Parameters, Functions and Uses”

Step Sequencers

Step sequencers send out user-specified data for every step, which can be used to modulate synth parameters. These devices are usually synced to the session tempo if they are in a DAW, or they can be synced internally or externally by means of a clock or sync signal. While drum machines are the most familiar example of step sequencers, do not limit yourself by only thinking in these terms. These devices are extremely powerful modulation sources that should be explored in depth.

Here’s an article to get you started: “The Basics of Step Sequencing (+ 9 Great Step Sequencers)”
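The timing math behind a tempo-synced step sequencer is straightforward. At a given BPM, a beat lasts 60 / BPM seconds. With four steps per beat (sixteenth notes in 4/4), each step lasts a quarter of that. The minimal sketch below holds each step’s value for its duration and loops the pattern. The 8-step pattern of filter-cutoff values is hypothetical.

```python
def step_duration_s(bpm, steps_per_beat=4):
    """Length of one step in seconds; 4 steps per beat = sixteenth notes."""
    return 60.0 / bpm / steps_per_beat


def step_value(steps, bpm, t, steps_per_beat=4):
    """Value of a looping step sequence at time t seconds (held for each step)."""
    index = int(t / step_duration_s(bpm, steps_per_beat)) % len(steps)
    return steps[index]


# Hypothetical 8-step pattern of filter-cutoff values in Hz.
pattern = [400, 800, 1200, 800, 2000, 400, 1600, 600]
print(step_duration_s(120), step_value(pattern, 120, 0.3))
```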

Other Modulation Sources

There are many unique and fantastic modulation ideas to be found in the wide array of synths on the market. Developers have used all sorts of creative approaches that defy categorization.

Here is an article that explores some of these approaches: “A Guide to Synth Modulation Sources and Controls”

Less Understood Parameters

Some synth parameters are harder to wrap your head around than others, such as key follow and octaves expressed in feet. Even the difference between legato and mono settings can elude newcomers.

Read this article for a look at some less talked about parameters: “18 Synth Parameters That Are Often Misunderstood”
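Two of these parameters reduce to simple formulas. Footage settings borrow from organ pipe lengths. 8′ is the reference (unison) pitch, each halving of the footage raises the pitch an octave, and each doubling lowers it an octave. Key follow (keytracking) scales a parameter, usually filter cutoff, with the note played. In the sketch below, an amount of 1.0 makes the cutoff move exactly with pitch. The reference note of 60 and that scaling convention are assumptions, because implementations vary.

```python
import math


def footage_to_ratio(feet):
    """Frequency multiplier for an organ-style footage setting (8' = unison)."""
    return 8.0 / feet


def footage_to_octaves(feet):
    """Octave offset relative to 8'."""
    return math.log2(8.0 / feet)


def keytracked_cutoff(base_hz, note, amount=1.0, ref_note=60):
    """Hypothetical key follow: cutoff moves amount * (note - ref_note) semitones."""
    return base_hz * 2.0 ** (amount * (note - ref_note) / 12.0)


for feet in (32, 16, 8, 4, 2):
    print(f"{feet}' -> x{footage_to_ratio(feet)} ({footage_to_octaves(feet):+.0f} octaves)")
```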


References

Roads, Curtis. The Computer Music Tutorial. MIT Press, 2012.