What is Bit Depth?

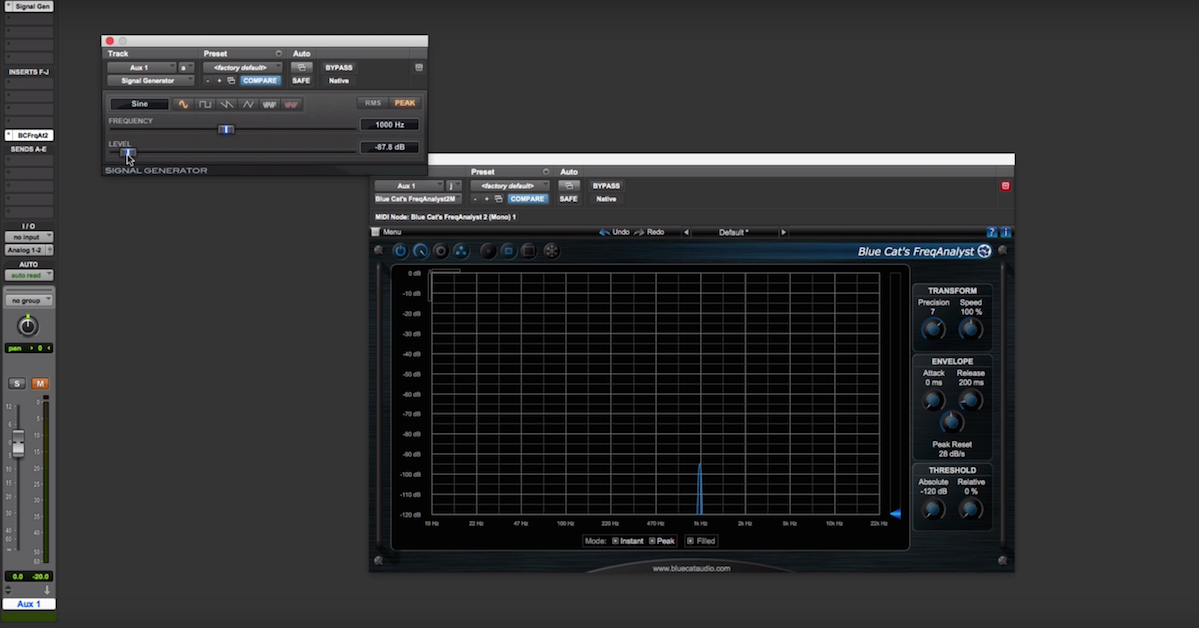

The basic concept you’ll need to understand is the relationship between the number of bits and the dynamic range of a recording.

The term “dynamic range” means the difference between the loudest signal you can record without distortion and the quietest signal you can record before the audio falls beneath the noise floor. The number of bits directly determines the dynamic range available in your recording: each additional bit adds roughly 6 dB.
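As a rough illustration, assume ideal linear PCM with no dither. The theoretical dynamic range is then the ratio between the largest and smallest representable levels, 20·log10(2^bits), which works out to about 6.02 dB per bit. This sketch (the function name is hypothetical) prints that figure for the bit depths used in this lesson.

```python
import math

def dynamic_range_db(bits: int) -> float:
    """Approximate dynamic range of ideal linear PCM: the ratio of full scale
    to one quantization step, 20*log10(2**bits), about 6.02 dB per bit.
    Ignores dither and the small extra term for sine-wave measurements."""
    return 20 * math.log10(2 ** bits)

# Compare the bit depths discussed in this lesson
for bits in (4, 8, 16, 24):
    print(f"{bits:2d}-bit: {dynamic_range_db(bits):6.1f} dB")
```

On this simple model, 16 bits gives roughly 96 dB and 24 bits roughly 144 dB, which is why 24-bit recordings leave so much room above the noise floor.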

Let’s listen to a few examples made at different bit depths. Pay special attention to the change in the amount of audible noise as we go through each. You’ll notice that the fewer the bits, the more noise you get.

First, a recording made with 16 bits per sample.

[mix, 16-bit]

Now a recording made with 8 bits.

[mix, 8-bit]

And now, using only 4 bits.

[mix, 4-bit]

At a resolution of 4 bits, the noise actually covers up some of the music in the quiet sections. It’s generally recommended that you use at least 24 bits in your recordings.
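The noise you hear in the 8-bit and 4-bit examples is quantization error: each sample gets rounded to the nearest available level, and fewer bits means coarser levels. Below is a minimal simulation, assuming undithered linear PCM with full scale at ±1.0 and a 441 Hz test tone sampled at 48 kHz (all illustrative choices, and the helper names are hypothetical). It quantizes the tone and measures the signal-to-noise ratio. A common rule of thumb for a full-scale sine is SNR ≈ 6.02·bits + 1.76 dB.

```python
import math

def quantize(x: float, bits: int) -> float:
    """Round a sample in [-1.0, 1.0) to the nearest of 2**bits evenly spaced levels."""
    step = 2.0 / (2 ** bits)            # spacing between adjacent levels
    q = round(x / step) * step
    return max(-1.0, min(1.0 - step, q))  # clamp to the representable range

def snr_db(bits: int, n: int = 48000, freq: float = 441.0,
           rate: float = 48000.0, amp: float = 0.9) -> float:
    """Quantize a sine tone to `bits` and return signal power over error power in dB."""
    signal_power = 0.0
    noise_power = 0.0
    for i in range(n):
        s = amp * math.sin(2 * math.pi * freq * i / rate)
        e = quantize(s, bits) - s        # quantization error for this sample
        signal_power += s * s
        noise_power += e * e
    return 10 * math.log10(signal_power / noise_power)

for bits in (16, 8, 4):
    print(f"{bits:2d}-bit SNR: {snr_db(bits):5.1f} dB")
```

Each bit removed costs about 6 dB of SNR, so dropping from 16 to 4 bits raises the noise by roughly 72 dB relative to the music, enough to bury the quiet passages.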

Now, most music distribution formats are 16 bits or fewer, and that’s fine for your audience. When you’re working as a music producer, though, you’ll often process a track: turning it up or down, running it through a limiter, or compressing it. Each of these can bring the noise floor up, so it’s nice to have a little extra dynamic range to spare. That way, the final product never raises the noise floor to the point where we can hear it.
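To see why the extra bits pay off once processing starts, here is a rough simulation under simplifying assumptions: undithered linear PCM, full scale at ±1.0, and a 441 Hz tone at 48 kHz. The helper names are hypothetical. A quiet take is quantized, then brought back up with make-up gain. The gain raises the quantization noise by exactly as many dB as it raises the music, so a take with fewer bits ends up with an audibly higher noise floor.

```python
import math

def quantize(x: float, bits: int) -> float:
    """Round a sample in [-1.0, 1.0) to the nearest of 2**bits evenly spaced levels."""
    step = 2.0 / (2 ** bits)
    return max(-1.0, min(1.0 - step, round(x / step) * step))

def noise_floor_after_boost_db(bits: int, boost_db: float, n: int = 48000) -> float:
    """Record a sine whose peak sits boost_db below 0.9, quantize it to `bits`,
    then apply boost_db of make-up gain. Returns the RMS level of the boosted
    quantization error in dB relative to full scale (1.0)."""
    gain = 10 ** (boost_db / 20)
    amp = 0.9 / gain                  # quiet take; the boost restores a 0.9 peak
    err_power = 0.0
    for i in range(n):
        s = amp * math.sin(2 * math.pi * 441.0 * i / 48000.0)
        err = (quantize(s, bits) - s) * gain  # make-up gain scales the error too
        err_power += err * err
    return 10 * math.log10(err_power / n)

for bits in (16, 24):
    print(f"{bits}-bit, +30 dB boost: noise floor {noise_floor_after_boost_db(bits, 30):6.1f} dB")
```

With a 30 dB boost, a uniform-noise model predicts the 16-bit take's noise floor rising to roughly -71 dB, while the 24-bit take stays near -119 dB, still far below audibility.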