6 Things to Know About Sample Rate and Bit Depth


In digital audio, sampling rate and bit depth can be a topic of quandary and debate. Within this topic, it can be difficult to find the facts in a swirling cyclone of opinions, and most of those opinions are unlikely to change. Whether you adopt these facts or not is ultimately up to you. Here are a few things you may not have known about sampling rate and bit depth.

Let’s kick this off by revisiting the basics. We start with an audio wave, a pressure wave with infinite resolution in the time and frequency domains. It is a wave that no electronic system, analog or digital, can fully and losslessly capture and reproduce. That being said, the limitation of a digital system is that we only have 1’s and 0’s to represent the audio we are hearing. So we might as well make the best use of those two digits, right?

First, we need to convert the analog representation of our audio wave into the digital realm. For this, we have the analog-to-digital converter, or ADC. Before it is sampled, our buttery smooth, infinitely resolute analog signal must pass through a low-pass filter known as an anti-aliasing filter. In short, it prevents frequencies above half the sampling rate (the Nyquist frequency) from folding back, or aliasing, into the range we’re trying to capture. The qualities of these filters play some of the biggest roles in capturing an analog sound, and they are one of the key factors that determine a converter’s “sound.”
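To make aliasing concrete, here is a small Python sketch of what happens when a tone above the Nyquist frequency is sampled with no anti-aliasing filter at all. It assumes ideal sampling of pure sine tones, and the helper name `alias_frequency` is our own, not from any converter’s documentation. A 30 kHz tone sampled at 44.1 kHz produces exactly the same sample values as a phase-inverted 14.1 kHz tone, so once it is sampled, there is no way to tell the two apart.

```python
import math

def alias_frequency(f_in, fs):
    """Hypothetical helper: the frequency (Hz) at which a tone at f_in shows up
    after sampling at fs with no anti-aliasing filter, folded into 0 .. fs/2."""
    f = f_in % fs
    return fs - f if f > fs / 2 else f

fs = 44_100             # CD sample rate; its Nyquist frequency is 22,050 Hz
f_ultrasonic = 30_000   # a tone above Nyquist that the filter should have removed
f_alias = alias_frequency(f_ultrasonic, fs)

# Sample both tones. Because 30,000 = 44,100 - 14,100, the 30 kHz tone's samples
# equal those of a phase-inverted 14.1 kHz tone.
n_samples = 64
above = [math.sin(2 * math.pi * f_ultrasonic * n / fs) for n in range(n_samples)]
alias = [-math.sin(2 * math.pi * f_alias * n / fs) for n in range(n_samples)]
max_diff = max(abs(a - b) for a, b in zip(above, alias))

print(f"30 kHz aliases to {f_alias} Hz; largest sample difference: {max_diff:.1e}")
```

This is why the filter has to sit in front of the sampling stage: after sampling, the 14.1 kHz impostor can’t be removed without also removing any real 14.1 kHz content.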

1. Sampling Rate

Sampling rate is best thought of as a container for frequency information.

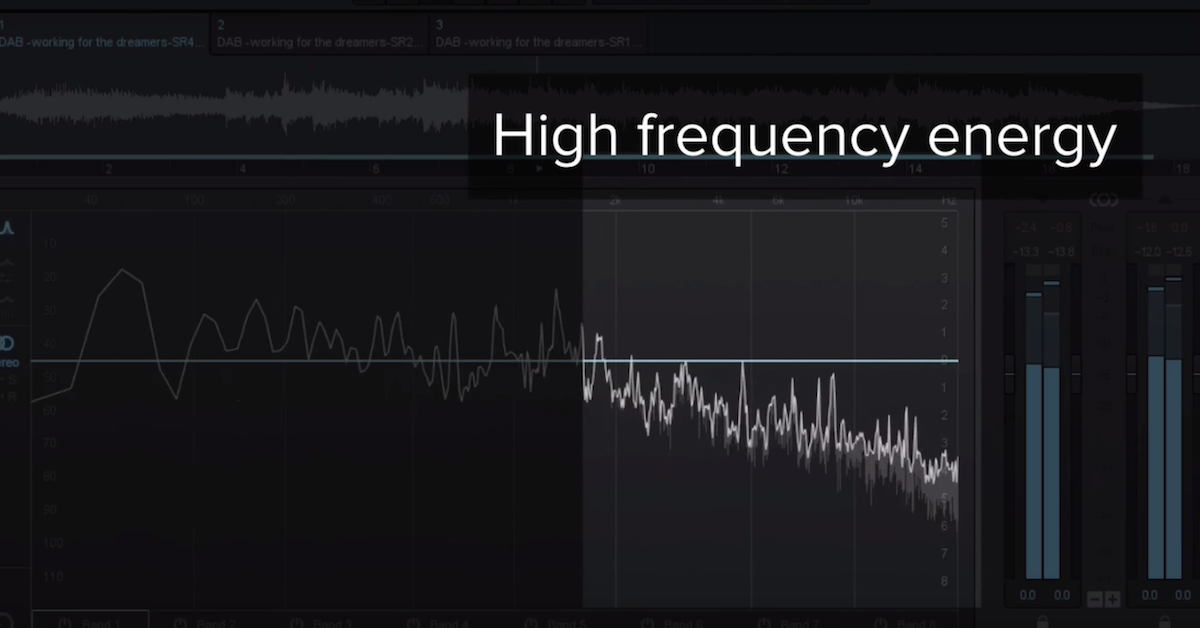

It’s the horizontal resolution, representing the time domain. It’s arguably not as important as you may think, but it does affect the audio you’re trying to represent and reproduce. It should therefore still be taken into consideration, depending on how delicate the recording you are making is. Higher frequencies, even those outside our hearing range, do affect the tones that are within that range.

Remember, audio is a pressure wave, so even slight changes in pressure may induce a butterfly effect in your audio. When we work at higher sampling rates, we’re able to capture those inaudible frequencies, which are largely the harmonics of the tones that we can hear. Working at higher sample rates also allows us to process them along with the frequencies that we can hear.

So just because we can’t hear those frequencies doesn’t mean we can’t feel them, or that they don’t have an impact on our sound. If we don’t capture frequencies above the range of human hearing, we end up with a bunch of high-frequency sine waves without character or subtlety in the treble range.

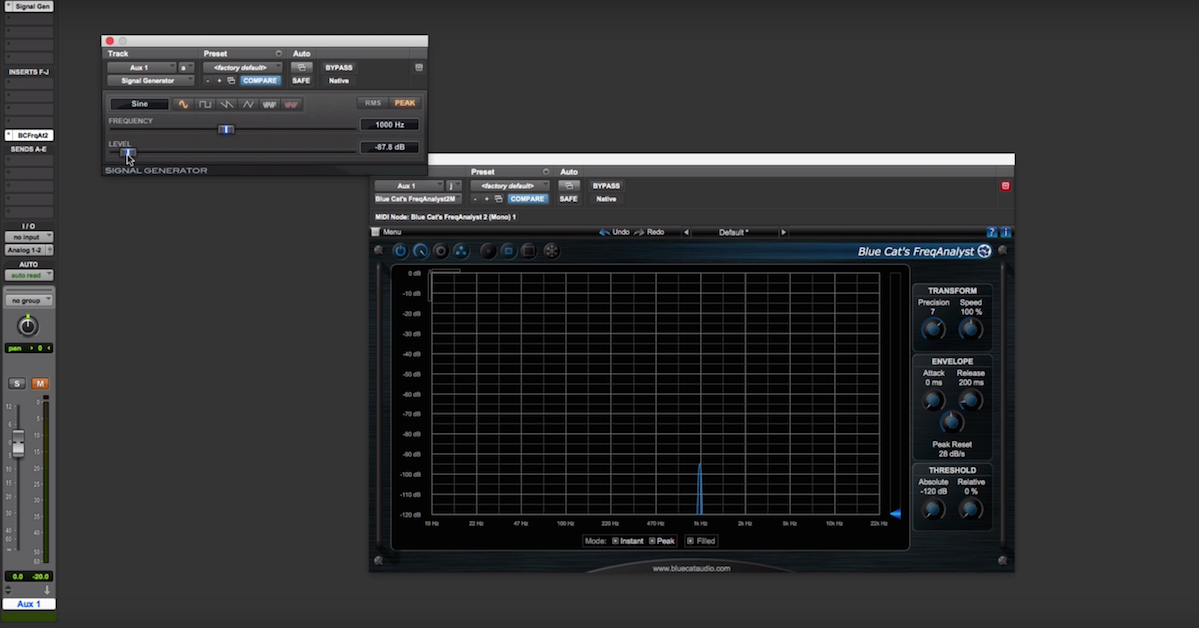

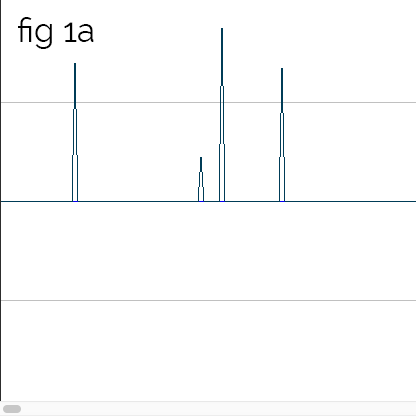

Often when we think about sample rate, we’re only thinking of the “highest frequency” we can accurately sample. But we often don’t think about the subtlety of what’s in between. For instance, look at fig 1a, where we have four one-sample ticks at a sampling rate of 192 kHz. Each tick lasts a single sample, or 1/192,000 of a second (about 5.2 microseconds), which is still audible, by the way.

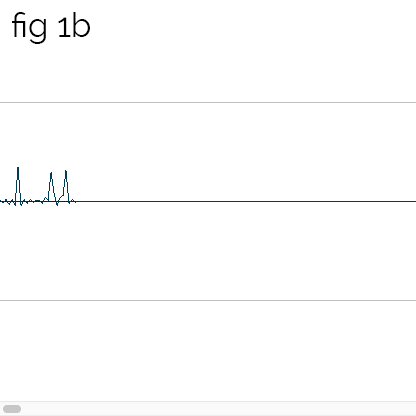

When we use a sample rate converter to resample these same ticks to 44.1 kHz (fig 1b), not only is the frequency information different, but so is the amplitude. So it’s not only frequency; we’re missing out on dynamic information, too. This happens because of interpolation.
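Here is a rough sketch of the experiment in fig 1b, assuming a simple windowed-sinc interpolator. That is one textbook approach; we don’t know which algorithm produced the figure. The function names, the Hann window, and `half_width=16` are illustrative choices, not any particular product’s settings. The sketch resamples a one-sample tick from 192 kHz to 44.1 kHz and reports the peak and how many output samples the tick is smeared across.

```python
import math

def sinc(x):
    """Normalized sinc, sin(pi*x) / (pi*x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def resample_windowed_sinc(x, fs_in, fs_out, half_width=16):
    """Hypothetical, unoptimized resampler for illustration only.

    Each output sample is a weighted sum of input samples, using a sinc
    low-pass kernel with its cutoff at the lower of the two Nyquist
    frequencies, tapered by a Hann window spanning half_width zero crossings.
    """
    fc = min(fs_in, fs_out) / 2          # cutoff frequency (Hz)
    gain = 2 * fc / fs_in                # unity gain in the passband
    support = half_width / (2 * fc)      # kernel half-length (seconds)
    n_out = int(len(x) * fs_out / fs_in)
    y = []
    for m in range(n_out):
        t = m / fs_out
        acc = 0.0
        for n, sample in enumerate(x):
            tau = t - n / fs_in
            if sample == 0.0 or abs(tau) >= support:
                continue
            window = 0.5 * (1 + math.cos(math.pi * tau / support))  # Hann taper
            acc += sample * gain * sinc(2 * fc * tau) * window
        y.append(acc)
    return y

def one_sample_tick(position, length=192):
    """Hypothetical helper: 1 ms of silence at 192 kHz with one full-scale sample."""
    x = [0.0] * length
    x[position] = 1.0
    return x

for position in (96, 97):
    y = resample_windowed_sinc(one_sample_tick(position), 192_000, 44_100)
    spread = sum(1 for v in y if abs(v) > 0.01)
    print(f"tick at sample {position}: peak {max(y):.3f}, {spread} samples above 0.01")
```

Because the 44.1 kHz version can only hold content below 22.05 kHz, the broadband tick’s peak falls to at most 44.1/192 ≈ 0.23 of full scale in this sketch. Shifting the tick by a single 192 kHz sample changes both the peak and the spread, since 192 kHz and 44.1 kHz sample times rarely line up.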

2. Bit Depth

Bit depth is best thought of as a container for the amplitude of our audio.

It’s also arguably not as important in the average home studio working on a heavy rock or EDM production. But if you are listening and recording at proper levels, it can have a large impact during the production process. There’s no sense in cramming all of your audio data into the last 4 to 8 bits when we have at least 12 to 60 more to work with. With proper gain staging and monitoring levels, these subtleties are much more apparent.
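A common rule of thumb puts numbers on what those bits are worth: each bit adds roughly 6 dB of dynamic range, or about 6.02·N + 1.76 dB of signal-to-quantization-noise ratio for a full-scale sine at N bits. The sketch below checks that by quantizing a near-full-scale 997 Hz sine in plain Python. It assumes simple rounding with no dither, and the function names are our own.

```python
import math

def quantize(x, bits):
    """Round x (nominally in [-1, 1)) to the nearest level of a signed
    fixed-point grid with the given number of bits, clipping at full scale."""
    scale = 2 ** (bits - 1)
    q = max(-scale, min(scale - 1, round(x * scale)))
    return q / scale

def measured_snr_db(bits, freq=997.0, fs=48_000.0, n=48_000, amplitude=0.999):
    """Hypothetical helper: signal-to-quantization-noise ratio of a sine, in dB."""
    signal = [amplitude * math.sin(2 * math.pi * freq * i / fs) for i in range(n)]
    error = [quantize(s, bits) - s for s in signal]
    p_signal = sum(s * s for s in signal) / n
    p_error = sum(e * e for e in error) / n
    return 10 * math.log10(p_signal / p_error)

def theoretical_snr_db(bits):
    """Textbook figure for a full-scale sine: about 6.02 dB per bit plus 1.76 dB."""
    return 6.02 * bits + 1.76

for bits in (8, 16, 24):
    print(f"{bits:>2} bits: measured {measured_snr_db(bits):6.1f} dB, "
          f"rule of thumb {theoretical_snr_db(bits):6.1f} dB")
```

The measured values should land close to the formula, and going from 16 to 24 bits adds roughly 48 dB of room below the loudest peak.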

3. Digital to Analog Conversion (DAC)

With both sampling rate and bit depth in play, the DAC transforms our bits back into a buttery smooth form of analog audio. It’s an interpolated form based on those sampled points. (That is, it fills in the blanks between them.) But it’s inarguably based upon the information that was captured. Frequencies outside the range of hearing also play an important role in the electronics that feed our reproduction equipment, so it’s important to take them into consideration as well.
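In the ideal case, the “filling in the blanks” a DAC performs is Whittaker-Shannon (sinc) interpolation: every sample contributes a sinc pulse, and the sum passes through the sampled points and recovers the band-limited signal between them. Real DACs approximate this with oversampling and finite reconstruction filters, so treat the following as an idealized sketch; the function names, test tone, and buffer length are assumptions chosen for illustration.

```python
import math

def sinc(x):
    """Normalized sinc, sin(pi*x) / (pi*x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def reconstruct(samples, fs, t):
    """Hypothetical helper: Whittaker-Shannon estimate of the continuous signal
    at time t (seconds). The infinite sum is simply truncated at the buffer edges."""
    return sum(s * sinc(fs * t - n) for n, s in enumerate(samples))

fs = 48_000
freq = 1_000
samples = [math.sin(2 * math.pi * freq * n / fs) for n in range(4_800)]  # 100 ms

# Evaluate at points that fall between samples, well away from the buffer edges,
# and compare with the true sine at the same instants.
for position in (2_400.25, 2_400.5, 2_417.8):
    t = position / fs
    print(f"{reconstruct(samples, fs, t):+.5f}  {math.sin(2 * math.pi * freq * t):+.5f}")
```

The two columns should agree closely; the small residual comes from truncating the sum at the edges of a finite buffer, which is one reason practical reconstruction filters are a compromise.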

Most decent audio equipment is tested at frequencies beyond the range of human hearing, and those frequencies do have an impact on how the electronics in the equipment behave. Ultimately, we’re trying to digitally capture the analog signal that represents our audio wave. Why spend $3,000 on a preamp if you can’t squeeze every penny’s worth out of it?

It remains true that quad sample rates like 176.4 kHz, 192 kHz, and higher may induce sampling errors and other inconsistencies. But when we downsample, or decimate, later on, we’ll be interpolating and recalculating those errors anyway. The point is to capture the information to begin with. And again, this is arguable to some with regard to its audible effect, but it’s still factual.

4. The Audio File’s Bit Depth

The audio file’s bit depth is often misunderstood and misinterpreted. The audio file that’s on our computer, the same one that is created by our DAW, is simply a container for the information that the ADC already created. So, the data already exists in complete form before it gets into the computer. That’s the key thing to take from this.

When we select an audio file bit depth to record at in the DAW, we’re selecting the size of the container, or “bucket,” that we want that information to go into. Most ADCs will capture your audio at 24 bits regardless of the selection you make in the DAW.

So what happens when I select a 32-bit or 64-bit float file? Your audio is still 24 bits until it is further processed. In most cases, it’ll pass through a plugin effect at 32-bit float, or even a 32-bit mixbus. (Some have 64-bit float.) But when it comes to capturing audio, you aren’t changing anything or making it sound better by simply putting it into a 32-bit or 64-bit float container. It’s the same information, with just a bunch of 0’s tacked on, waiting for something to do.
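Here is a quick way to convince yourself of the “bunch of 0’s” point. A 32-bit float has a 24-bit significand (23 stored bits plus an implicit leading 1), so every 24-bit integer sample fits in one exactly. This sketch uses Python’s standard `struct` module to round values to single precision; the helper names are hypothetical, and it checks a strided subset of the 24-bit range rather than all 16.7 million values.

```python
import struct

def to_float32(x):
    """Round a Python float (64-bit) to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def int24_to_float32(sample):
    """Hypothetical helper: map a signed 24-bit integer sample to [-1.0, 1.0)."""
    return to_float32(sample / 2 ** 23)

def float32_to_int24(value):
    """Hypothetical helper: map a float sample back to the 24-bit integer grid."""
    return round(value * 2 ** 23)

# Every 24-bit sample should survive the trip into a 32-bit float "container"
# and back without any change.
samples = list(range(-2 ** 23, 2 ** 23, 997)) + [2 ** 23 - 1]
lossless = all(float32_to_int24(int24_to_float32(s)) == s for s in samples)
print(f"{len(samples)} samples checked, lossless round trip: {lossless}")
```

The conversion adds nothing and removes nothing; the float container only starts to matter once processing produces values that need its extra range.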

So why is a 32-bit or 64-bit float file container good? With a 24-bit file, we have a fixed number of binary places (in this case, 24 bits) to capture the information between 0 and 1 that our ADC delivers. In a float file, the binary point can move, or “float,” to represent different values. Not only that, but a 32-bit float also adds 8 bits for the exponent, which gives us headroom that wasn’t there before. This allows us to do some pretty impressive things in terms of processing and computing.

We can essentially give our audio more resolution than it originally had, simply by processing it and interpolating new points in the dynamic spectrum. We can also dynamically and non-destructively alter our audio as long as it remains in the digital realm. We can even prevent further clipping of the captured audio. That’s why you often hear that it’s so important to keep the same bit depth or higher throughout the entire production process.
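The clipping point is easy to see with numbers. In this sketch, a peak at 0.9 of full scale is boosted by a factor of 4 (about +12 dB) and later turned back down. Python floats are 64-bit, but a 32-bit float behaves the same way at these values; `store_int24` is a hypothetical helper that mimics writing the intermediate result to a 24-bit fixed-point file.

```python
def store_int24(x):
    """Hypothetical helper: store a sample as 24-bit fixed point. Anything
    outside [-1, 1) is clipped at full scale, so the overshoot is lost."""
    q = max(-2 ** 23, min(2 ** 23 - 1, round(x * 2 ** 23)))
    return q / 2 ** 23

peak = 0.9
gain = 4.0  # roughly +12 dB applied somewhere in the chain

# Floating point: the boosted peak (3.6) is simply stored, and turning the
# gain back down later recovers the original value.
restored_float = (peak * gain) / gain

# 24-bit fixed point: the boosted peak clips just below 1.0, so turning the
# gain back down leaves a peak of about 0.25 instead of 0.9.
restored_int = store_int24(peak * gain) / gain

print(f"float path: {restored_float}, 24-bit path: {restored_int:.6f}")
```

In the fixed-point path the overshoot is clipped away for good, so no later gain change can bring the original peak back.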

So yeah, if you get 16-bit files from your friend to mix down, work at 24-bit, or better yet, 32-bit float. You aren’t making it any worse, only better. Whether you are producing subtle classical recordings or mixing a new breed of square-wave EDM, bit depth is just as important for representing your dynamics, even if there perceivably aren’t any.

5. Bit Reduction

This brings us to the important topic of bit reduction. Ultimately, we have to take this dynamically captured audio and make it smaller. So, yet again, there needs to be more interpolation. How do you take 32 bits of information that you spent so long critically mixing and listening to, and cram it into a 16-bit space? Dithering.
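To see what dither actually buys you, here is a sketch that reduces a very quiet tone, only 0.4 of a 16-bit step at its peak, to 16 bits with and without dither. It uses TPDF (triangular) dither, one common choice; the article doesn’t say which dither or noise shaping to use, and the function names, tone level, and random seed are assumptions for illustration.

```python
import math
import random

def requantize(x, bits, rng=None):
    """Hypothetical helper: reduce a sample in [-1, 1) to `bits` by rounding.
    If rng is given, TPDF dither (the sum of two independent uniform values,
    each spanning one LSB) is added before rounding."""
    lsb = 1.0 / 2 ** (bits - 1)
    if rng is not None:
        x += (rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)) * lsb
    q = max(-2 ** (bits - 1), min(2 ** (bits - 1) - 1, round(x / lsb)))
    return q * lsb

bits = 16
lsb = 1.0 / 2 ** (bits - 1)
n = 48_000
# A very quiet 997 Hz tone whose peak is only 0.4 LSB at 16 bits.
quiet = [0.4 * lsb * math.sin(2 * math.pi * 997 * i / 48_000) for i in range(n)]

plain = [requantize(s, bits) for s in quiet]
rng = random.Random(1)  # fixed seed so the run is repeatable
dithered = [requantize(s, bits, rng) for s in quiet]

def tone_gain(y, ref):
    """Least-squares estimate of how much of `ref` survives in `y` (1.0 = all of it)."""
    return sum(a * b for a, b in zip(y, ref)) / sum(b * b for b in ref)

print(f"plain rounding: {tone_gain(plain, quiet):.3f}, "
      f"TPDF dither: {tone_gain(dithered, quiet):.3f}")
```

Plain rounding turns the whole tone into digital silence, while the dithered version keeps it, on average, at its original level, buried in a small amount of benign noise. That noise is the “sound” of dithering.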

These days, the process of dithering doesn’t seem to hold much importance, at least in my small world, and it is often not taken into consideration because of the type of material that’s being produced. In digital audio, a signal’s amplitude is represented directly by its bits, so the bit depth sets how finely that amplitude can be described.

Most popular music now is produced in a distinctive way, blending distorted tones passed through devices intended to distort them even further (at least in pop music, that is). But it’s part of the style. This distortion creates harmonics, which are integer multiples of the original frequencies, and that might be another important argument for holding sample rate in higher regard.
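As a rough illustration of how distortion spreads energy upward, this sketch hard-clips a 5 kHz sine at half its amplitude and measures the harmonics with a single-bin DFT. Hard clipping is a crude stand-in for the distortion described above, and the tone, clip level, and 192 kHz analysis rate are arbitrary choices. Symmetric clipping produces odd harmonics at 15, 25, 35 kHz and so on, and everything from 25 kHz up lies above the 22.05 kHz Nyquist frequency of a 44.1 kHz session.

```python
import math

fs = 192_000   # a "quad" rate, so harmonics up to 96 kHz can be represented
f0 = 5_000     # test tone
n = 1_920      # exactly 50 cycles of the tone, so each harmonic sits on a DFT bin

tone = [math.sin(2 * math.pi * f0 * i / fs) for i in range(n)]
clipped = [max(-0.5, min(0.5, s)) for s in tone]  # hard clipping at half amplitude

def amplitude_at(x, freq, fs):
    """Hypothetical helper: amplitude of the component of x at freq (Hz),
    computed from a single DFT bin."""
    re = sum(v * math.cos(2 * math.pi * freq * i / fs) for i, v in enumerate(x))
    im = sum(v * math.sin(2 * math.pi * freq * i / fs) for i, v in enumerate(x))
    return 2 * math.hypot(re, im) / len(x)

for k in range(1, 8):
    f = k * f0
    note = "above 22.05 kHz" if f > 22_050 else ""
    print(f"{f:>6} Hz  {amplitude_at(clipped, f, fs):.4f}  {note}")
```

The unclipped tone has essentially nothing at these harmonic frequencies; the clipped one does, and at a 44.1 kHz sample rate the components above 22.05 kHz would have to be filtered out or they would alias.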

But with loud 16-bit audio, where there isn’t much dynamic movement and only 16 bits to represent it, we’re only using the last few bits of information to represent our audio. The “sound” of dithering comes in when our audio starts reaching the noise floor, and we use that noise floor as a point of comparison for dynamic levels. So make your silence count just as much as your audible information.

6. Downsampling

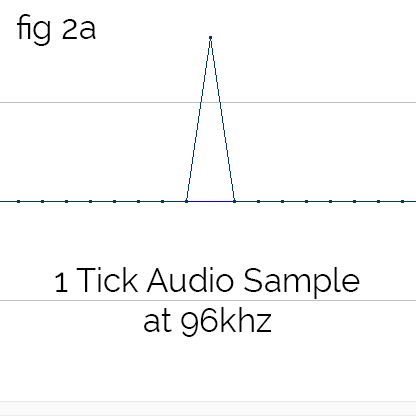

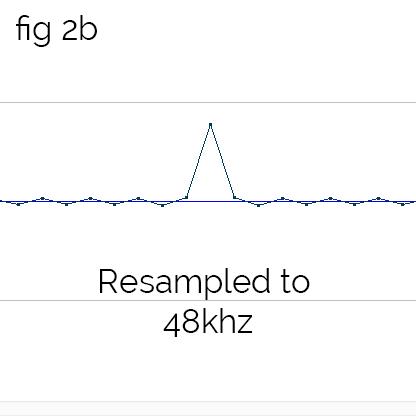

The topic of choosing a sample rate and downsampling is also sometimes an exercise in sanity. Here are the facts: 44.1 kHz, 88.2 kHz, and 176.4 kHz are sample rates for audio media. Think CDs: 44.1 kHz is the CD standard, and 88.2 kHz and 176.4 kHz are its multiples, so if your audio ends up on a CD, these are the rates for you. The sample rates of 48 kHz, 96 kHz, and 192 kHz are for video media like DVDs, Blu-ray discs, etc. The idea that “more is better” is inaccurate. It should be “more is different.” The math to convert 96 kHz to 48 kHz is simple: divide by 2 (compare fig 2a to 2b).

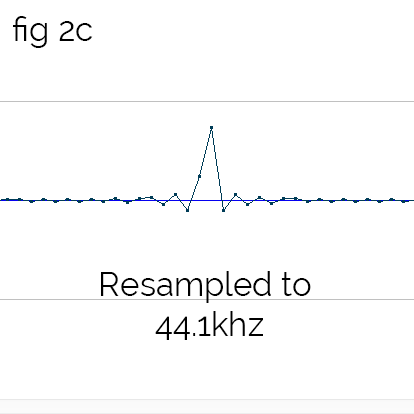

The math to convert 96 kHz to 44.1 kHz (compare fig 2a to 2c) is far more involved, and therefore changes your sound. It’s not necessarily bad, just different.
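The difference between the two conversions shows up as soon as you reduce the ratios to lowest terms. This sketch uses Python’s standard `fractions.Fraction`; the helper name `resampling_factors` is our own.

```python
from fractions import Fraction

def resampling_factors(fs_in, fs_out):
    """Hypothetical helper: smallest integers (up, down) with
    fs_out = fs_in * up / down."""
    ratio = Fraction(fs_out, fs_in)
    return ratio.numerator, ratio.denominator

conversions = [(96_000, 48_000), (88_200, 44_100), (96_000, 44_100), (192_000, 44_100)]
for fs_in, fs_out in conversions:
    up, down = resampling_factors(fs_in, fs_out)
    print(f"{fs_in} Hz -> {fs_out} Hz: up by {up}, down by {down}")
```

96 kHz to 48 kHz reduces to 1/2, while 96 kHz to 44.1 kHz reduces to 147/320: conceptually, upsample by 147, low-pass filter, then keep every 320th sample. Only one output sample in every 147 lands exactly on an original sample time; everything in between has to be calculated.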

The most important thing to understand is that uneven decimation isn’t an exact representation of what you captured; instead, it’s an interpolated form of it. This is why some people believe 48 kHz sounds different from 44.1 kHz. Uneven decimation introduces smearing into the audio (fig 1b) that wasn’t there before. Unfortunately, some ringing will always be there, to a greater or lesser degree.
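The ringing is a property of the steep low-pass filters that resampling relies on. This sketch runs a step through a linear-phase windowed-sinc low-pass and reports how far the output swings past the step on either side. It is a common design, though not necessarily what any given converter or DAW uses; the 20 kHz cutoff at 96 kHz, the Hann window, and the tap count are illustrative assumptions, and the function names are hypothetical.

```python
import math

def windowed_sinc_lowpass(cutoff, fs, half_width=32):
    """Hypothetical helper: taps of a linear-phase FIR low-pass, a sinc kernel
    tapered by a Hann window and normalized to unity gain at DC (Hz units)."""
    taps = []
    for k in range(-half_width, half_width + 1):
        x = 2 * cutoff / fs * k
        s = 1.0 if k == 0 else math.sin(math.pi * x) / (math.pi * x)
        w = 0.5 * (1 + math.cos(math.pi * k / (half_width + 1)))  # Hann window
        taps.append(s * w)
    total = sum(taps)
    return [t / total for t in taps]

def fir_filter(x, taps):
    """Convolve x with taps, keeping the output time-aligned with the input
    (the centre tap sits on the current sample)."""
    half = len(taps) // 2
    y = []
    for n in range(len(x)):
        acc = 0.0
        for k, h in enumerate(taps):
            i = n + half - k
            if 0 <= i < len(x):
                acc += h * x[i]
        y.append(acc)
    return y

step = [0.0] * 100 + [1.0] * 100   # silence, then a sudden full-scale step
taps = windowed_sinc_lowpass(cutoff=20_000, fs=96_000)
out = fir_filter(step, taps)

print(f"lowest value before the step: {min(out[:100]):+.4f}")  # dips below 0
print(f"highest value after the step: {max(out[100:]):.4f}")   # rises above 1
```

The output dips below zero before the step arrives (pre-ringing, a side effect of the filter being linear-phase) and overshoots after it (post-ringing). Changing the window or tap count changes how much, but a steep low-pass filter always rings to some degree.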

Another topic of quandary is “Should I resample by re-recording the analog signal, or resample in the computer?” Both methods certainly have their pros and cons. By re-recording the analog playback of a digital recording, we are simply re-capturing an interpolated waveform, which lends itself to greater analog accuracy. However, it can introduce unwanted noise, so it’s something to watch out for.

If you already have an excellent signal-to-noise ratio, this isn’t necessarily introducing anything noticeable within the confines of our bit depth, and it would likely be covered up by dithering. By resampling “in the box,” we can end up with the ringing artifacts that you see in the figures. Unfortunately, that’s an unavoidable consequence of the math, though some algorithms do a better or “different” job than others. In the end, it truly is up to your ears. Still, it’s important to know the facts. So with that, I’ll get off my high horse, and we’ll see you next time!