Asynchrony of A/V (Pt 2): Resolution of Hearing


In my previous article, I introduced the topic of Asynchrony in A/V Media and discussed some of the industry’s standards and opinions. I also provided a small table of useful figures pertaining to the subject.

This article will focus on our ability to perceive asynchrony between audio and visual material.

The Resolution of Hearing

The first part of this is to look at how fast our ears react to sounds or, more accurately, at the resolution of our hearing. If a sound has an echo, how much delay must there be between the two sounds for our ears to perceive an echo rather than a single blended sound? This figure is generally accepted to be around 30ms, although it differs from person to person and from sound to sound.

Essentially, a snare hit followed by an echo 10ms later would be perceived only as a thickened or messy sound; with a delay of 30ms or more, two distinct sounds should be heard. Looking back at the figures table, 30ms is ¾ of a frame of visual media at 25fps (40ms per frame), so you could suggest that our ears are quick enough to pick up sync errors of this size. The truth, however, is slightly different. It is not only our ears in question: the brain is receiving both light and sound information simultaneously. The other issue is that 30ms is the boundary for hearing an echo. In a sync error there is no original sound on time followed by a repeat; there is only the delayed sound to be heard.
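To make the echo figures concrete, here is a minimal sketch that labels a delay as "fused" or "distinct" using the commonly quoted 30ms threshold, and expresses the delay as a fraction of a frame. The 25fps frame rate and the function names are assumptions for illustration; a real listener's threshold varies with the person and the sound.

```python
# Minimal sketch: classify an echo delay against an assumed ~30 ms fusion
# threshold and express it as a fraction of a video frame.
ECHO_THRESHOLD_MS = 30.0  # commonly quoted figure; varies by listener and sound
FPS = 25.0                # assumed frame rate (40 ms per frame)


def frame_duration_ms(fps: float = FPS) -> float:
    """Duration of one video frame in milliseconds."""
    return 1000.0 / fps


def perceived_as(delay_ms: float, threshold_ms: float = ECHO_THRESHOLD_MS) -> str:
    """Rough label for how a sound plus a delayed copy of it is likely heard."""
    return "distinct echo" if delay_ms >= threshold_ms else "thickened/fused"


for delay in (10.0, 30.0):
    frames = delay / frame_duration_ms()
    print(f"{delay:.0f} ms = {frames:.2f} frames -> {perceived_as(delay)}")
# 10 ms = 0.25 frames -> thickened/fused
# 30 ms = 0.75 frames -> distinct echo
```

A hard cut-off is a simplification: in practice the change from "messy" to "two sounds" is gradual, which is why the threshold is a parameter rather than a constant baked into the logic.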

How We Perceive Sync Errors Between Sound & Video

A test carried out by Reeves & Voelker set out to answer this question, with some interesting results. They found that their test subjects could accurately detect sync errors of 5 frames (200ms). A majority could also perceive errors of 2.5 frames (100ms), but not all of them. These thresholds are much larger than the industry seems to suggest is necessary.
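The millisecond figures follow directly from the frame counts. Below is a minimal sketch of the conversion, assuming 25fps (40ms per frame), which matches the numbers quoted here; the function name is illustrative, and at other frame rates the same frame count corresponds to a different time.

```python
# Minimal sketch: convert a sync error in frames to milliseconds.
# Assumes 25 fps by default; pass a different fps for other formats.
FPS = 25.0


def frames_to_ms(frames: float, fps: float = FPS) -> float:
    """Duration of `frames` video frames in milliseconds."""
    return frames * 1000.0 / fps


print(frames_to_ms(5))                       # 200.0: detected accurately in the test
print(frames_to_ms(2.5))                     # 100.0: detected by a majority, not all
print(round(frames_to_ms(5, fps=30.0), 1))   # 166.7: same frame count at 30 fps
```

Thinking in milliseconds rather than frames makes results comparable across formats, since "5 frames out" means a shorter error at higher frame rates.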

The Test…

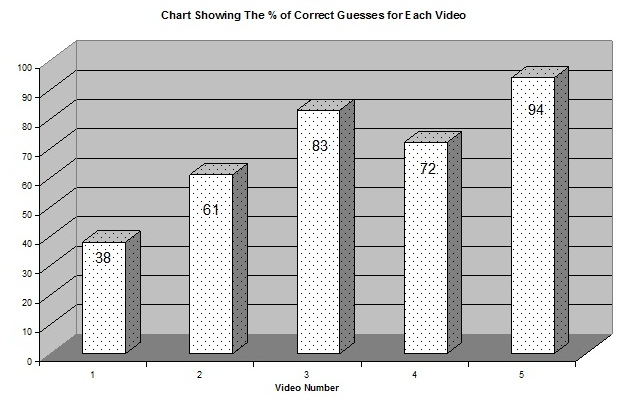

For my own research I carried out a similar test. I took a pre-made music video, split it into five clips, and then shifted the audio in each clip by a different amount. These clips were played to a small group, who were asked to say whether or not they believed there were any sync problems. The graph above shows the percentage of correct answers given for each clip:

- Video 1 was 2 frames out and only 38% could tell. While 38% is a low number, having over a third of your audience able to see a problem would be very bad for a film/TV producer.

- Video 3 was left with the audio in time with the video, so its 83% represents the people who correctly said there was no error.

- Videos 2 and 4 were four and seven frames out of sync respectively, and both show a much higher percentage. Seven frames would be considered a big problem, though a competent editor/mixer is unlikely to make that kind of error. However, as I'll discuss in the third part, there are other ways for asynchrony to appear.

- Video 5 is the most interesting result and the one editors should take most note of. Video 5 had the audio placed four frames early relative to its video counterpart, and as the graph shows, almost the entire test group was able to see the error.
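For anyone wanting to run a similar test, here is a minimal sketch of how the clips could be prepared and scored. It is not the procedure used for the test described here: the 48kHz sample rate, 25fps frame rate, plain-list audio representation and all function names are assumptions. Only clip 5 is stated to be early, so the direction of the other offsets is also assumed.

```python
# Minimal sketch of building and scoring a sync test.
# Assumptions (not from the original test): 48 kHz audio, 25 fps video,
# audio held as a plain list of float samples.
SAMPLE_RATE = 48_000
FPS = 25

# Offsets per clip in frames. Only clip 5 is stated to be early (negative);
# the direction for clips 1, 2 and 4 is not given, so positive (audio late)
# is assumed here.
CLIP_OFFSETS = {1: 2, 2: 4, 3: 0, 4: 7, 5: -4}


def frames_to_samples(frames: int, sample_rate: int = SAMPLE_RATE, fps: int = FPS) -> int:
    """Audio samples spanned by a whole number of frames (integer fps assumed)."""
    return frames * sample_rate // fps


def shift_audio(samples: list[float], offset_frames: int,
                sample_rate: int = SAMPLE_RATE, fps: int = FPS) -> list[float]:
    """Copy of `samples` moved by `offset_frames`, keeping the same length.

    Positive offset: audio late (silence padded at the start).
    Negative offset: audio early (start trimmed, silence padded at the end).
    """
    n = len(samples)
    shift = min(frames_to_samples(abs(offset_frames), sample_rate, fps), n)
    if offset_frames >= 0:
        return [0.0] * shift + samples[: n - shift]
    return samples[shift:] + [0.0] * shift


def percent_correct(offset_frames: int, said_out_of_sync: list[bool]) -> float:
    """Share of viewers whose answer matches reality for one clip.

    For an in-sync clip (offset 0) the correct answer is "no problem",
    so its score counts the viewers who reported no error.
    """
    if not said_out_of_sync:
        raise ValueError("no answers given")
    actually_out = offset_frames != 0
    correct = sum(answer == actually_out for answer in said_out_of_sync)
    return 100.0 * correct / len(said_out_of_sync)


for clip, offset in CLIP_OFFSETS.items():
    print(f"clip {clip}: {offset:+d} frames = {frames_to_samples(abs(offset))} samples")
```

As an illustration with hypothetical answers, `percent_correct(0, [False, False, True, False])` returns `75.0`: three of four viewers correctly reported no error on an in-sync clip. Scoring the in-sync clip this way is what makes a figure like Video 3's a measure of correct "no error" answers.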

I Believe Early Audio Caused an Issue Due to Its Unrealism

In nature we are used to seeing things before hearing them, because light travels far faster than sound. We know that a drum struck or a cannon fired in the distance will be heard a second or so later, depending on the distance. As such, it appears our brain allows a certain amount of delay before we register sound as out of sync. In real life, however, we would never hear something before seeing it, because the sound always arrives after the light. Our brain makes allowance for audio being perceived after the visual data, but not before it, so it is essential when editing video that audio is never placed before its visual counterpart.
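The natural lag can be estimated from the speed of sound. Below is a minimal sketch, assuming roughly 343 m/s (dry air at about 20 °C) and 25fps, together with a simple check that flags audio placed ahead of its picture. The sign convention (positive means audio late) and the function names are assumptions for illustration.

```python
# Minimal sketch: natural audio lag versus distance, and a check that
# flags audio placed ahead of the picture.
# Assumptions: speed of sound ~343 m/s (dry air, about 20 °C), 25 fps,
# offsets signed so that positive = audio late, negative = audio early.
SPEED_OF_SOUND_M_PER_S = 343.0
FPS = 25


def acoustic_delay_ms(distance_m: float) -> float:
    """How much later sound arrives than light from a source distance_m away.

    Light's travel time over everyday distances is negligible, so it is ignored.
    """
    return distance_m / SPEED_OF_SOUND_M_PER_S * 1000.0


def audio_leads_video(offset_ms: float) -> bool:
    """True if the audio is placed before its visual counterpart,
    which never happens with real-world sources."""
    return offset_ms < 0


delay = acoustic_delay_ms(343.0)
print(f"343 m: sound arrives {delay:.0f} ms ({delay * FPS / 1000:.0f} frames) after light")
# 343 m: sound arrives 1000 ms (25 frames) after light
print(audio_leads_video(-160.0))  # True: audio four frames early at 25 fps
print(audio_leads_video(80.0))    # False: audio two frames late
```

The check is deliberately one-sided: a late offset mimics a distant source and may go unnoticed, while any early offset has no real-world equivalent.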
