ABX Testing and Audio Perception

The topic of audio perception has been pretty hot lately. From the popular news media coverage of Mastered for iTunes to the pages of TapeOp magazine, it’s not uncommon for people to be asking the question, “can you really hear the difference?” This is very good news for music and music lovers.

That might not seem like an extraordinary question for people to be asking, but the elastic reach of hardware and software marketing nonsense has devalued sensory feedback. We are routinely exposed to the most outrageous qualitative claims that have never been proven (or even suggested) with a marginally systematic listening test.

In the interest of encouraging this recent flash of sensory curiosity, let’s take a look at how anyone with a basic DAW setup might be able to go about conducting a listening test of their own.

Ground Rules

- If a claim or question includes phrases like, “sounds better” or “can hear the difference”, the most direct way to prove it (or put it to rest) is a listening test.

- “Is better” is an irrelevant claim about audio and music if it can’t be heard.

- A listening test is useless if the listener can visually verify what he or she is listening to (e.g. selections labeled MP3 and CD, or any visible waveforms). Our eyes will betray our ears.

- A listening test is useless if the listener is allowed to switch wildly back and forth between two or more examples (unless you’re testing a tool for switching wildly back and forth between two or more examples).

- The results of a test aren’t results if they can’t be repeated.

All of these types of premises are frequently debated in online communities, but we have to draw some boundaries. This article is not concerned with proving some sort of existential benefit of technology A or B, just in hearing the difference between two things.

ABX Testing

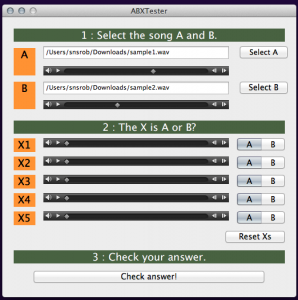

An ABX listening test takes two audio samples (A and B), and provides a method for determining whether they are distinguishable to a listener. During the ABX test the listener is asked to answer whether each of a series of playback examples (X) is sample A or B. Most test cycles will run between 5 and 10 X’s. The listener’s score is typically quantified in terms of percentage of correctly identified X’s.
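
To make the mechanics concrete, here is a minimal Python sketch of one test cycle: it draws a hidden, random sequence of X's and scores the listener's answers as a percentage. The names `make_trials` and `score_round` are made up for illustration, and this is not how ABXTester or any particular app is implemented. A real tool also handles playback, which is left out here.

```python
import random

def make_trials(count, seed=None):
    """Return a hidden random sequence of 'A'/'B' assignments for X."""
    rng = random.Random(seed)
    return [rng.choice("AB") for _ in range(count)]

def score_round(trials, answers):
    """Return (number correct, percent correct) for one test cycle."""
    if len(trials) != len(answers):
        raise ValueError("need exactly one answer per trial")
    correct = sum(t == a for t, a in zip(trials, answers))
    return correct, 100.0 * correct / len(trials)

# Example: a 10-trial cycle where the listener gets the first two wrong.
trials = make_trials(10, seed=1)
answers = list(trials)
for i in (0, 1):
    answers[i] = "B" if trials[i] == "A" else "A"

correct, percent = score_round(trials, answers)
print(f"{correct}/{len(trials)} correct ({percent:.0f}%)")
```

The one design point that matters is that the sequence is generated by the machine and stays hidden until the answers are in.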

Presumably, if you identify X correctly close to 100% of the time, you can hear a difference. If your scores keep landing around 50% (about what you would expect from guessing) or vary widely across multiple tests, the suggestion is that you’re not hearing a reliable difference between the two audio examples.
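
If you want a rough sense of how much a given score means, one common approach is to ask how often pure guessing would do at least that well. The sketch below assumes each X is an independent 50/50 guess, which is a simplification, and `abx_p_value` is just an illustrative name. It is a sanity check, not a substitute for repeating the test.

```python
from math import comb

def abx_p_value(correct, trials):
    """Chance of scoring at least `correct` out of `trials` by guessing alone."""
    if not 0 <= correct <= trials:
        raise ValueError("correct must be between 0 and trials")
    # Sum the binomial probabilities for every score >= correct, with p = 0.5.
    favorable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favorable / 2 ** trials

for correct, trials in [(5, 5), (6, 10), (8, 10), (9, 10)]:
    p = abx_p_value(correct, trials)
    print(f"{correct}/{trials} correct: guessing does this well {p:.1%} of the time")
```

Under that assumption, a perfect 5 out of 5 still happens by luck about 3% of the time, and 6 out of 10 happens by luck more than a third of the time. That is one reason a single short cycle shouldn't settle anything.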

There are several software ABX apps available. I use Takashi Jogataki’s (free) ABXTester all the time. I highly recommend it for Mac users. QSC made a fairly famous hardware ABX Comparator until 2004.

Application Example (a real one)

Different software companies create and sell different codecs for creating compressed audio formats like MP3 and AAC. There are a lot of reasons to prefer one over another, including user interface, cost, and brand association. To keep myself honest, I’ll typically download a demo if a new codec comes out, and ABX it against my current preference.

I’ll bounce the same audio source twice – once with each codec product set to identical digital audio precisions. Absolutely nothing else about the two bounces can be different, or the test is pointless. If I’m really being honest, I get someone else to load up the examples into the tester app so I don’t know which is which.

After one round of ABX testing (repeatedly identifying X as either A or B based on what I’m hearing) I observe my success rate at identifying A or B. I’ll usually repeat the test 3 to 5 times, maybe using different monitors (i.e. limited bandwidth versus fancy studio monitors). If the results suggest any ability to hear the difference (especially if my preference isn’t my trusted codec), I’ll usually repeat all of the above with a wide variety of playback samples from different musical genres.

This process isn’t objective or blind enough to qualify as a truly scientific test, but it goes a long way to eliminate a lot of self-deception and marketing fog.

Test Cautions

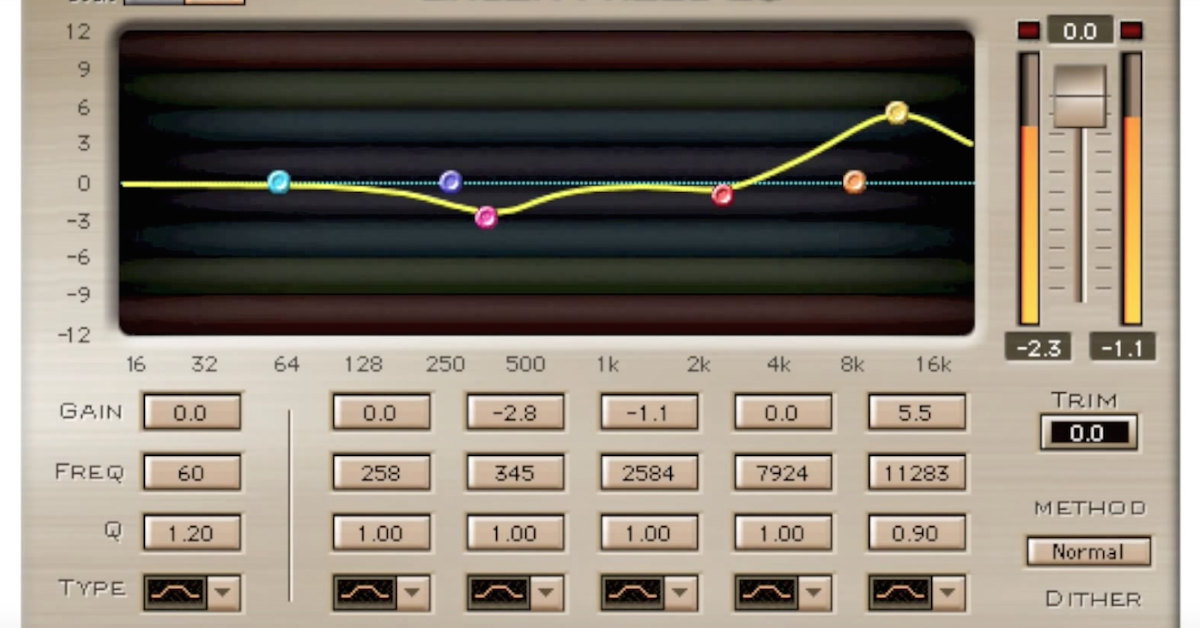

The most important step in the ABX testing process is defining a test that has a single variable. If there is more than one thing changing between A and B, you’re not really going to learn anything useful.

For example, a question like, “does mic pre A sound different than mic pre B,” is complicated. First, you have to consider the wide range of variables between two successive performance examples. Even after eliminating those with a mic splitter (or a playback example), you would need to consider the gain staging of the two mic pres, and devise a standard for establishing ‘equal gain’ between the two signal chains (e.g. acoustic test noise metered at a reference level at the mic pre outputs).
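
As one possible way to check ‘equal gain’ after the fact, you could compare the RMS level of the same test signal as captured through each chain. The sketch below is only an illustration: it assumes the recordings are already loaded as lists of floats scaled to ±1.0, it uses a generated sine tone as a stand-in for real captures, and the function names are hypothetical.

```python
import math

def rms_dbfs(samples):
    """RMS level in dB relative to full scale (samples scaled to +/-1.0)."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")  # digital silence
    return 10 * math.log10(mean_square)

def gain_offset_db(chain_a, chain_b):
    """How many dB louder chain A is than chain B (negative if quieter)."""
    return rms_dbfs(chain_a) - rms_dbfs(chain_b)

def sine(amplitude, freq=1000.0, rate=48000, count=48000):
    """Stand-in test signal; a real check would use the recorded captures."""
    return [amplitude * math.sin(2 * math.pi * freq * i / rate) for i in range(count)]

pre_a = sine(0.5)    # pretend capture through mic pre A
pre_b = sine(0.25)   # pretend capture through mic pre B, at half the amplitude
print(f"A is {gain_offset_db(pre_a, pre_b):+.2f} dB relative to B")  # about +6.02 dB
```

Whatever offset you measure is the amount to trim before the ABX test, so that level is not a second variable.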

A question like, “can I hear the difference between a 96kHz digital recording and one sampled at 44.1kHz,” would minimally require you to:

- Have an acoustic or analog test signal source (a digitally derived source would be irrelevant)

- With an acoustic source you would need to have two identical converters feeding two different DAW setups with nothing but sample rate different between them

- Bounce both examples with the same digital audio precisions in order to be able to conduct the ABX test, re-defining the question as, “can I hear the difference between a 96kHz digital recording and one sampled at 44.1kHz once they’re both bounced as 44.1kHz?”

Obviously the simpler the question, the simpler the test. This should begin to highlight some of the most positive results of doing ABX testing on your own. After getting used to thinking through the variables that affect our perception, marketing claims will begin to inspire the question, “how would you test that?” The answer will either inspire some new exploration of your own, or instantly expose the silliness that often lies just under the surface of pro audio marketing.

The Challenge

ABX testing is just one way of attempting to determine unbiased answers to questions of audio perception. Other methods like null testing might be better for particular scenarios – as long as the test is well-conceived. There are some popular examples of tremendously silly null testing on YouTube, but you’ll be smart enough to consider a single variable at a time.
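
For anyone who hasn’t tried one, a null test subtracts one file from the other (the same thing as flipping polarity and summing) and looks at what is left. Here is a minimal sketch, assuming both files are the same length, sample-aligned, and already loaded as lists of floats. `null_residual_db` is a made-up name, and real files would need loading and alignment first.

```python
import math

def null_residual_db(a, b):
    """Level of the difference (a - b) relative to a, in dB.

    Returns -inf for a perfect null (the two signals are identical).
    """
    if len(a) != len(b):
        raise ValueError("signals must be the same length and sample-aligned")
    reference = sum(x * x for x in a)
    if reference == 0:
        raise ValueError("reference signal is silent")
    residual = sum((x - y) ** 2 for x, y in zip(a, b))
    if residual == 0:
        return float("-inf")
    return 10 * math.log10(residual / reference)

original = [math.sin(i / 10) for i in range(1000)]
identical = list(original)
slightly_quieter = [0.99 * x for x in original]

print(null_residual_db(original, identical))         # perfect null: -inf
print(null_residual_db(original, slightly_quieter))  # residual roughly 40 dB down
```

Note that a 1% level mismatch alone leaves a residual only about 40 dB down. A null test measures every difference at once, including gain and timing, so the single-variable rule applies here too.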

Share your ideas in the comments below, and we can hash over some ways to zero in on an interesting test or two.