Audio Phase 101: Timing Difference or Polarity?


What Do We Mean?

One of the most confusing topics in audio is phase. I think part of what makes it confusing is that people use it in reference to more than one issue.

What always clears things up for me is to figure out precisely which aspect is the concern, then focus on the part that matters. The confusion is common enough that I avoid using the word “phase” at all.

There are really two things people mean when they say phase: polarity or timing difference.

Hopefully differentiating these can help you decide if you should push that Invert button or move a microphone when things sound weird.

Polarity

You’ll see a button on some mic preamps and other audio gear labeled “Phase,” “Phase Reverse,” “Phase Invert,” etc. This is really polarity.

Engineers will talk about XLR connections being pin 2 hot versus pin 3 hot. This is also polarity.

If you have two mic elements as close together as possible and combining them drops the overall level significantly, someone may describe that as having one mic out of phase with the other, but really this is polarity.

Sound is vibrating air. Molecules are pushed then pulled, then pushed, then pulled and so on. Those air vibrations are the force that moves a microphone element. When the air pushes against the element, there should be a rise above zero in the waveform. When air pulls the element there should be a drop below zero.

This pushing or pulling is polarity. If you reverse the polarity, a push against the mic element will cause a drop below zero instead of a rise above. It will also cause a rise above zero for a pull of the mic element instead of a drop below. Now some argue that you can hear a difference on a single channel between Push = Rise vs. Push = Drop. I’m not going to argue that one way or the other. The point is: nothing moved earlier or later in time. We simply inverted the push/pull relationship between air pressure and its electrical/sampled representation.

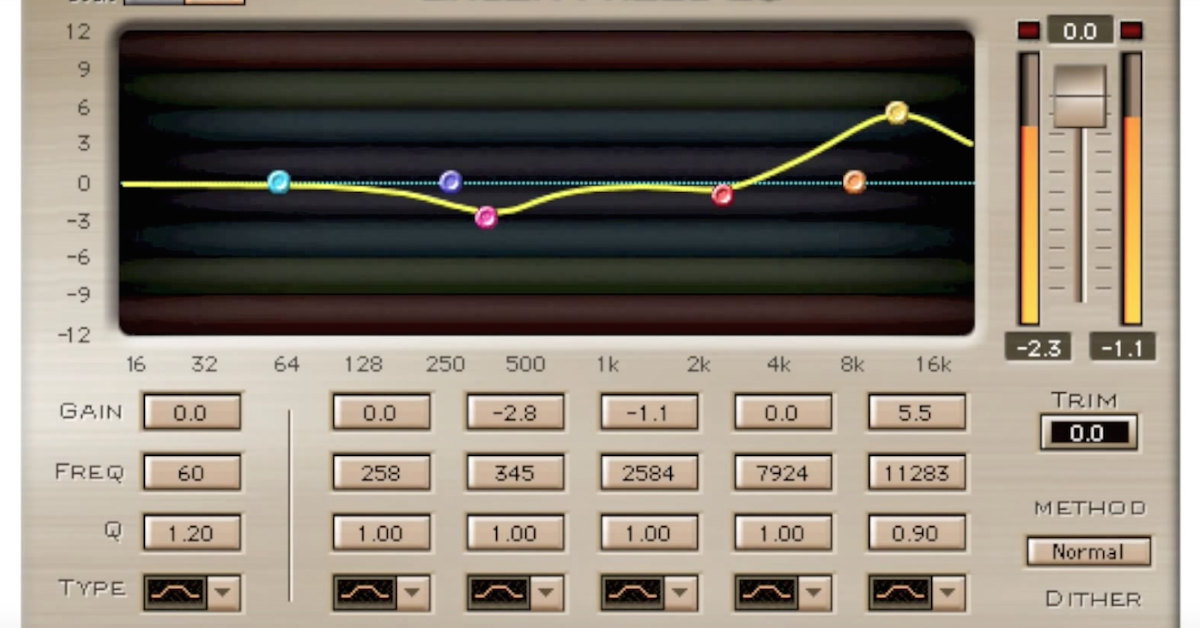

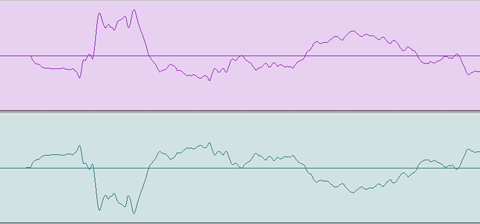

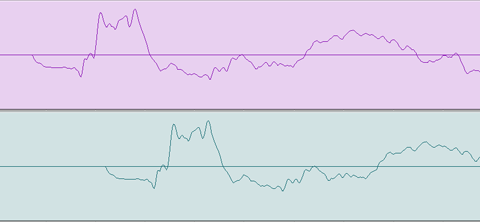

Let’s examine an ideal case of polarity as the issue: combine two identical signals (in level, spectrum and time) and the amplitude will double, a rise of about 6 dB. If you reverse the polarity of only one of those signals (as shown above) and combine them, they will perfectly cancel.
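Here is a minimal sketch of that ideal case in Python. The 1 kHz test tone, the 48 kHz sample rate and the helper names are my own assumptions for illustration; any signal summed with an exact copy of itself behaves the same way.

```python
import math

def sine(freq_hz, n_samples, sample_rate=48000):
    """Generate a sine tone as a plain list of samples (illustrative helper)."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(n_samples)]

def peak(signal):
    """Largest absolute sample value."""
    return max(abs(s) for s in signal)

a = sine(1000, 480)            # 10 ms of a 1 kHz tone
b = list(a)                    # identical in level, spectrum and time
b_inverted = [-s for s in b]   # same signal with polarity reversed

summed = [x + y for x, y in zip(a, b)]
cancelled = [x + y for x, y in zip(a, b_inverted)]

gain_db = 20 * math.log10(peak(summed) / peak(a))
print(round(gain_db, 2))   # 6.02, the amplitude doubled
print(peak(cancelled))     # 0.0, perfect cancellation
```

Notice that nothing in the inverted copy is shifted in time. Every sample simply changes sign.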

Timing Difference

People will warn that when you use more than one mic on a source, you should be careful not to create phase problems. When the sound of the snare arrives sooner in the snare spot mic than in the overheads, this can more accurately be described as a timing difference.

Digital signal processing can cause latency, which is sometimes described as sounding “phasey”. You know: latency, as in late, as in timing difference.

A timing difference may cause comb filtering. It may not. So once you’ve figured out that you’re dealing with a timing difference rather than polarity, you still need to investigate comb filtering. Most experienced sound professionals know the sound of obvious comb filtering when they hear it. Other times it may be subtle, making your audio sound less than desirable but not necessarily “phasey”.
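To see where the “comb” comes from, here is a small sketch that lists the frequencies that null out when a signal is summed with an equal-level copy of itself delayed by a fixed time. The nulls fall at odd multiples of 1 / (2 × delay). The function name and the 20 kHz ceiling are assumptions for illustration, and real microphones rarely deliver the equal levels this idealized case assumes.

```python
def comb_notch_frequencies(delay_ms, max_hz=20000.0):
    """Null frequencies (Hz) for a signal summed with an equal-level copy
    delayed by delay_ms. Nulls sit at odd multiples of 1 / (2 * delay)."""
    if delay_ms <= 0:
        raise ValueError("delay_ms must be positive")
    notches = []
    k = 0
    while True:
        freq_hz = (2 * k + 1) * 1000.0 / (2.0 * delay_ms)
        if freq_hz > max_hz:
            break
        notches.append(freq_hz)
        k += 1
    return notches

one_ms = comb_notch_frequencies(1.0)
print(one_ms[:3])    # [500.0, 1500.0, 2500.0]
print(len(one_ms))   # 20 nulls below 20 kHz
```

A 1 ms delay puts evenly spaced notches every 1 kHz across the spectrum, which is the regular pattern of teeth that gives the comb filter its name.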

Conditions that Create Comb Filtering

When it comes to recording music with microphones, comb filtering occurs under some pretty specific circumstances. If we want to reduce the possibility of comb filtering, we need to know what causes it and what doesn’t so that we can accurately focus on prevention.

Broadband

In my experience, most musical instruments are broadband. By contrast, when you use a tone generator to create a 1 kHz sine wave, that is a single frequency, not a broadband musical signal. Phase is the issue for a specific frequency, like a 1 kHz tone. But comb filters occur for broadband signals.

Anything more complicated than a sine wave will be less an issue of phase and more an issue of comb filtering, especially instruments that are largely unpitched (like a snare drum), or that vary in pitch over time (like the way a plucked string changes pitch).
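One way to see why a single phase number only makes sense for a single frequency: the same timing difference works out to a different phase shift at every frequency. This is a minimal sketch, and the 0.5 ms delay and the function name are assumptions chosen for illustration.

```python
def phase_shift_degrees(freq_hz, delay_ms):
    """Phase shift a fixed delay produces at one frequency."""
    return 360.0 * freq_hz * delay_ms / 1000.0

delay_ms = 0.5  # assumed timing difference between two mics
for freq_hz in (100, 500, 1000, 2000):
    print(freq_hz, "Hz:", phase_shift_degrees(freq_hz, delay_ms), "degrees")
# 100 Hz: 18.0, 500 Hz: 90.0, 1000 Hz: 180.0, 2000 Hz: 360.0
```

With this delay, 1 kHz lands 180 degrees out and cancels, while 2 kHz lands a full cycle later and reinforces. A broadband instrument contains both at once, so the result is a comb filter rather than one phase value.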

Duration

Comb filtering is more noticeable when the broadband signal has significant duration. White and pink noise are extreme examples of long-duration broadband signals (they are “steady state,” continuing indefinitely) and will be the most likely to have audible comb filtering.

The more percussive (shorter) something is the less likely you will notice comb filtering. In extreme cases a transient may be so short that it has decayed beyond audibility by the time the delayed version ever begins.

Volume Difference

If a snare drum is picked up by microphone A and also by microphone B, and there are a few milliseconds of delay between them, they will tend to comb filter if you combine them. But if the level of the snare differs between the mics by 9 dB or more, the comb filtering will not tend to be audible.

There may be other sounds common to mic A and mic B, but what limits the audibility of comb filtering for the snare is the level difference of the snare in those mics. If you can achieve snare separation of 9 dB or more, you can effectively eliminate audible comb filtering for the snare.
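The effect of a level difference can be estimated with a little arithmetic. This sketch computes how far the peaks rise and the dips fall when a steady signal is summed with a delayed copy that is some number of dB quieter. It only measures the size of the ripple. It does not model audibility, so the 9 dB figure above remains a rule of thumb, and the function name is hypothetical.

```python
import math

def comb_ripple_db(level_difference_db):
    """Return (peak_db, dip_db) of the comb response, relative to the louder
    signal alone, when the delayed copy is level_difference_db quieter."""
    g = 10 ** (-abs(level_difference_db) / 20)   # linear gain of quieter copy
    peak_db = 20 * math.log10(1 + g)
    dip_db = float("-inf") if g >= 1.0 else 20 * math.log10(1 - g)
    return peak_db, dip_db

for diff_db in (0, 3, 6, 9, 12):
    peak_db, dip_db = comb_ripple_db(diff_db)
    print(diff_db, "dB apart:", round(peak_db, 1), round(dip_db, 1))
# At 9 dB apart the peaks are about +2.6 dB and the dips about -3.8 dB
```

At equal levels the dips are complete nulls. At 9 dB of separation they shrink to a few dB, which fits the idea that the comb filter becomes much harder to hear even though it has not vanished entirely.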

Delay

Another limit is the amount of delay. Most comb filtering occurs when the delay is under about 30 milliseconds (ms), depending on the three previous factors. Beyond 30 ms, the delayed signals begin to be heard as discrete echoes.

Since sound takes roughly 1 ms to travel one foot, comb filters will not tend to be an issue with mics that are 30 feet or more apart. Beyond 30 feet you might get some really wacky echoes instead though.
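The distance-to-delay conversion is easy to sketch. The speed of sound used here, about 1125 feet per second, is my assumption for air at room temperature, so the 1 ms per foot rule comes out slightly generous. The function names are hypothetical.

```python
SPEED_OF_SOUND_FT_PER_S = 1125.0  # assumption: roughly 343 m/s, air near 20 °C

def delay_ms(extra_distance_ft):
    """Arrival-time difference for a path that is extra_distance_ft longer."""
    return extra_distance_ft / SPEED_OF_SOUND_FT_PER_S * 1000.0

def first_notch_hz(extra_distance_ft):
    """Lowest null frequency if the two arrivals were summed at equal level."""
    return 1000.0 / (2.0 * delay_ms(extra_distance_ft))

print(round(delay_ms(1.0), 2))        # 0.89 ms for one foot
print(round(delay_ms(30.0), 1))       # 26.7 ms for thirty feet
print(round(first_notch_hz(3.0), 1))  # 187.5 Hz for a 3 ft path difference
```

What matters is the difference in path length from the source to each mic, which is not always the same as the distance between the mics themselves.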

Interaction

Directional microphones can help achieve a level difference of 9 dB or more. For example, a cardioid microphone is theoretically 6 dB lower at 90 degrees off axis. But not all mics live up to the theory. The off-axis response of a microphone is worth your attention when you want to prevent comb filtering.
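The theoretical cardioid figure comes from the textbook pattern, sensitivity = 0.5 + 0.5 × cos(angle). Here is a short sketch of that ideal pattern. Real microphones deviate from it, especially at high frequencies, so treat the numbers as a starting point only. The function name is hypothetical.

```python
import math

def cardioid_db(angle_deg):
    """Off-axis level of an ideal cardioid, in dB relative to on axis."""
    sensitivity = 0.5 + 0.5 * math.cos(math.radians(angle_deg))
    if sensitivity <= 0.0:
        return float("-inf")   # the theoretical null at the rear
    return 20 * math.log10(sensitivity)

print(round(cardioid_db(90), 1))    # -6.0 dB at the side

# Angle at which the ideal pattern is 9 dB down
target_gain = 10 ** (-9 / 20)
angle_for_9_db = math.degrees(math.acos(2 * target_gain - 1))
print(round(angle_for_9_db, 1))     # about 106.9 degrees
```

In this idealized model, the unwanted source needs to sit a little behind the side of the mic, past roughly 107 degrees, before the pattern alone provides 9 dB of rejection. Distance differences add to that in practice.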

If you had two mics relatively close to each other and you simply mixed one at least 9 dB below the other, that could also eliminate audible comb filtering. Of course the opposite is possible: you may not hear comb filters during tracking, but once you mix things and get spaced mics within 9 dB of each other, they become audible. Add dynamic volume changes (compression, volume automation) and you can get comb filtering sometimes but not others. It can be a tricky thing to manage.

Now if your microphone channels never combine, then you won’t ever get comb filtering from those two different signals. Someone may hard pan mic A left and mic B right thinking: they don’t combine (different channels), so they won’t comb filter. But because there are so many different ways that stereo can collapse to mono, it is a good idea to consider what your stereo sounds like in mono, especially if timing differences could comb filter and make your mix sound awful.

This is why a spaced pair of drum overheads should be checked in mono, to make sure the timing differences don’t cause audible comb filtering when combined. If they do, I believe the most appropriate solution is to reposition the mics (distance and/or angle) for greater separation, 9 dB or more. Or change the mics (different kind and/or different pattern) to increase separation. Or position the mics coincident to remove all timing difference.

Further Reading

See also About Comb Filtering, Phase Shift and Polarity Reversal from Moulton Laboratories.

Most of what I know about comb filters I learned from F. Alton Everest’s The Master Handbook of Acoustics. For the reader who wants to dig further into comb filtering, both in theory and practice, Everest’s writing is the best I’ve found.

—

For more, check out this video tutorial from Eric Tarr.