How to Use ChatGPT to Improve Your Music Productions

If you’ve kept up with tech news over the past few months, you’ve probably noticed a lot of hype (and controversy) surrounding ChatGPT, which is a large language model developed by San Francisco-based research company OpenAI. As someone who is fascinated with technology, I immediately started using (abusing?) ChatGPT, mostly for nonsense tasks like asking it to “write a hypothetical scene from The Simpsons involving Moe selling toilet wine out of the Simpson home.” (Scroll to the end of the article to view.) The more time I spend using it, though, the more I believe it to be a revolutionary tool that will positively alter the course of humanity as long as it’s not overly exploited by greedy humans. So, we’ll see what happens.

Anyway, considering the relatively short time ChatGPT has been publicly available, I am impressed by its ability to simplify text-based processes, its problem-solving potential and its creativity. A few notes: ChatGPT as we know it today isn’t 100% accurate, and it will admit that. I like to enter a prompt a couple of times and compare the responses, just to see whether one of them was missing something or was worded less clearly. It also relies on older data to generate its responses: its most recent training data is from September 2021, so be aware that it may not be completely up to date.

Inspired after a few days of playing around with ChatGPT, I reached out to Dan Comerchero, the founder of this site, expressing interest in writing this article, and was pleased to hear back almost immediately. After a brief email exchange, he left me with: “for now, I guess being the audio site that talks about AI is better than getting eaten by it.” With that, I’m excited to share how you might use ChatGPT to improve your music productions.

1. As a Troubleshooting Companion

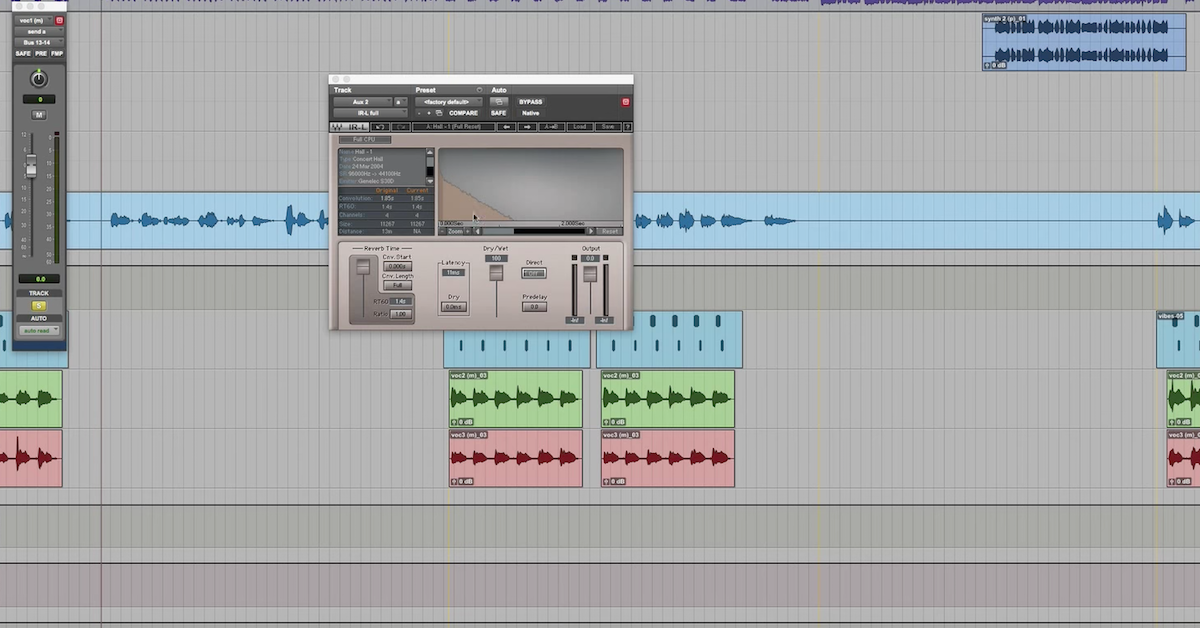

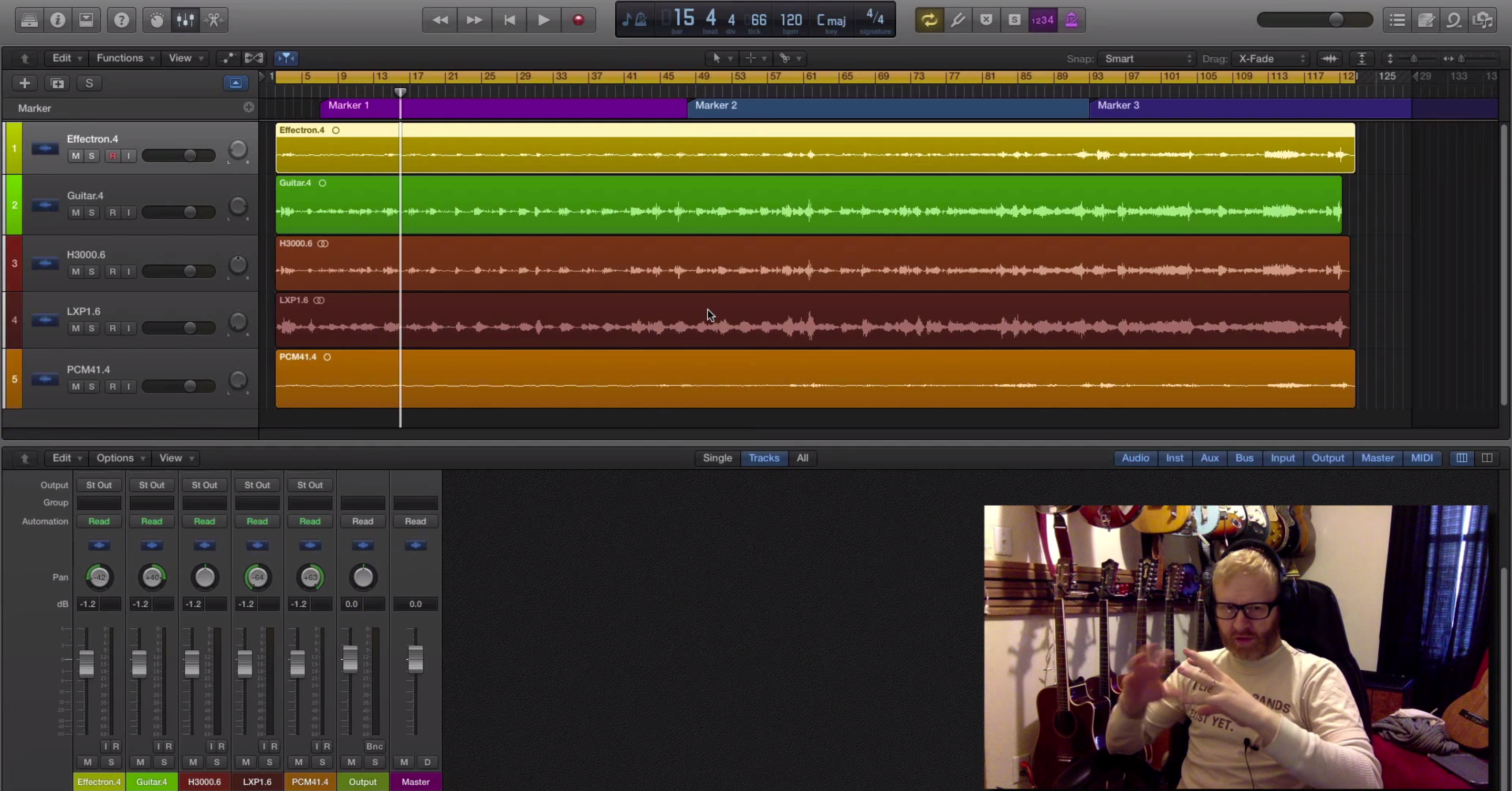

I used to scour message boards for hours searching for solutions to technical issues, and usually had to sift through a fair amount of snarky commentary and incorrect responses before finding what I needed. While ChatGPT isn’t a foolproof troubleshooting utility, it handles the simple stuff pretty well, and it communicates clearly. Check out these two exchanges about common beginner issues in Pro Tools and Ableton Live.

Prompt: The keyboard shortcuts in Pro Tools aren’t working, what am I doing wrong?

ChatGPT Response: “If the keyboard shortcuts in Pro Tools aren’t working, there are a few things that could be causing the issue:

- Check your keyboard settings: ensure that your keyboard settings are set to the correct language and that the shortcuts are correctly assigned.

- Verify the key commands are enabled: check that the “Keyboard Focus” button is enabled in the Edit window toolbar. This allows you to use keyboard shortcuts within the Pro Tools interface.

- Check for conflicts: ensure that the shortcut keys you are trying to use are not already assigned to another function or command in Pro Tools. If they are, you will need to reassign them to another shortcut key.

- Restart Pro Tools: sometimes, restarting Pro Tools can help solve any software glitches or bugs that may be causing keyboard shortcut issues.

- Update your software: if you’re running an outdated version of Pro Tools, it’s possible that keyboard shortcuts may not work as intended. Try updating to the latest version of Pro Tools to see if this resolves the issue.

If none of these steps work, you may need to contact Avid’s technical support for further assistance.”

Prompt: I’m getting a crackling sound when I hit play in Ableton Live, please help me.

ChatGPT Response: “If you’re experiencing a crackling sound when hitting play in Ableton Live, there are a few possible causes and solutions you can try:

- Buffer size: the buffer size determines how much audio Ableton Live processes at once. A lower buffer size will result in lower latency but may also cause crackling if the computer is not powerful enough to keep up. Try increasing the buffer size in the Audio Preferences to see if it improves the sound.

- CPU overload: if the CPU is overloaded, it can cause crackling and dropouts in the audio. To reduce the CPU usage, you can try freezing tracks that are not being used, closing other applications running in the background, or upgrading your computer’s hardware.

- Sample rate and bit depth: make sure that your project’s sample rate and bit depth match your audio interface’s settings. Mismatching sample rates and bit depths can cause crackling and other audio issues.

- Audio interface: ensure that your audio interface is functioning correctly and has the latest drivers installed. You can try resetting the audio interface or unplugging and plugging it back in.

- Plugins: some plugins may cause crackling due to their CPU usage. Try disabling or removing any plugins that may be causing issues.

- Audio files: if you’re using samples or audio files in your project, they may be the cause of the crackling. Check that the files are not corrupted and try converting them to a different format.

- Latency compensation: make sure that Ableton Live’s latency compensation is turned on. This ensures that tracks are synchronized correctly and can help reduce crackling.

By trying these solutions, you should be able to diagnose and fix the issue causing the crackling sound in Ableton Live.”

In both instances, ChatGPT identified the usual culprit almost immediately.
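The buffer-size advice in the Live response comes down to simple arithmetic: one buffer holds `buffer_size` samples, so it takes `buffer_size / sample_rate` seconds to fill. Doubling the buffer gives the CPU twice as long to process each block (fewer dropouts and crackles) at the cost of twice the latency. The short Python sketch below tabulates that trade-off. It counts a single buffer only; real-world round-trip latency also includes the input and output buffers plus driver and converter overhead, so actual figures will be higher.

```python
def buffer_latency_ms(buffer_size: int, sample_rate: int) -> float:
    """Time in milliseconds to fill ONE audio buffer.

    This is a lower bound: round-trip latency also includes input and
    output buffers and driver/converter overhead.
    """
    return buffer_size / sample_rate * 1000.0


# Common buffer sizes at two common sample rates.
for sr in (44_100, 48_000):
    for size in (64, 128, 256, 512, 1024):
        print(f"{size:>5} samples @ {sr} Hz: {buffer_latency_ms(size, sr):6.2f} ms")
```

At 48 kHz, for example, a 512-sample buffer works out to roughly 10.7 ms per buffer and a 128-sample buffer to about 2.7 ms, which is why raising the buffer is the first thing to try for crackles and lowering it is the first thing to try for sluggish monitoring.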

2. As a Sound Design Shaman

ChatGPT was trained on an unfathomably vast number of books, articles and websites. I asked ChatGPT if it drew on The Pro Audio Files when building its database of knowledge, and it said “it’s possible.” Because of this, I find it to be an admirable resource when trying to design sounds that are similar to those heard on popular recordings that have been researched and written about. Check out these questions I asked it about sound design:

Prompt: How can I create a synth sound similar to “Jump” by Van Halen using Logic Pro?

ChatGPT Response: “To create a synth sound similar to “Jump” by Van Halen in Logic Pro, you can try the following steps:

- Open Logic Pro and create a new software instrument track.

- Choose the ES2 synthesizer from the list of available instruments.

- In the ES2 synthesizer, select the “Analog” waveform from Oscillator 1.

- Set the Octave of Oscillator 1 to 4.

- Increase the Attack and Release times to create a smooth envelope for the sound.

- Turn on the “Sawtooth” waveform for Oscillator 2, and detune it slightly from Oscillator 1.

- Set the Octave of Oscillator 2 to 5.

- Increase the Cutoff and Resonance parameters of the filter to create a brighter, more resonant sound.

- Add some chorus and reverb effects to the sound to give it more depth and space.

Once you’ve created the basic sound, you can experiment with different settings and effects to fine-tune the sound and make it sound more like the “Jump” synth. You may also want to consider adding some MIDI automation to create a more dynamic sound that evolves over time. Remember, creating the perfect synth sound can take some experimentation and tweaking, so don’t be afraid to play around with different settings and effects until you find the sound that you’re looking for.”
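ChatGPT’s recipe leans on octave settings and a slight detune between the two oscillators. In equal temperament, an octave doubles the frequency and a detune of c cents multiplies it by 2^(c/1200), so the slow “beating” that makes a detuned pair sound wide comes from the small difference between the two frequencies. The Python sketch below (standard library only) computes those numbers and renders one second of a naive, aliasing sawtooth mix. It treats ChatGPT’s “Octave 4” and “Octave 5” as one octave apart, which is an assumption; the C4 root note and the 7-cent detune are arbitrary example values, not settings from “Jump” or from the ES2.

```python
A4_HZ = 440.0
SAMPLE_RATE = 48_000


def midi_to_hz(note: int) -> float:
    """Equal-tempered frequency of a MIDI note (note 69 = A4 = 440 Hz)."""
    return A4_HZ * 2 ** ((note - 69) / 12)


def detune(freq_hz: float, cents: float) -> float:
    """Shift a frequency by a number of cents (100 cents = 1 semitone)."""
    return freq_hz * 2 ** (cents / 1200)


def naive_saw(freq_hz: float, sample_rate: int, n_samples: int) -> list[float]:
    """Non-band-limited sawtooth in [-1, 1); it aliases, which is fine for a sketch."""
    return [2.0 * ((freq_hz * n / sample_rate) % 1.0) - 1.0 for n in range(n_samples)]


root_hz = midi_to_hz(60)               # C4, an example note (assumption)
osc1_hz = root_hz                      # Oscillator 1
osc2_hz = detune(root_hz * 2, 7.0)     # Oscillator 2: one octave up, +7 cents (assumption)

# Osc 2 sits slightly above Osc 1's 2nd harmonic, so the two drift
# in and out of phase at roughly this rate (the audible "beating").
beat_hz = osc2_hz - 2 * osc1_hz

mixed = [
    0.5 * (a + b)
    for a, b in zip(naive_saw(osc1_hz, SAMPLE_RATE, SAMPLE_RATE),
                    naive_saw(osc2_hz, SAMPLE_RATE, SAMPLE_RATE))
]
print(f"Osc 1 {osc1_hz:.2f} Hz, Osc 2 {osc2_hz:.2f} Hz, beat {beat_hz:.2f} Hz")
```

A larger detune speeds up the beating and makes the sound wider but less stable in pitch; a smaller one gives a slower, subtler shimmer.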

Prompt: I want my vocals to sound exactly like “Money Machine” by 100 Gecs. How do I achieve that if I’m using Logic Pro?

ChatGPT Response: “To achieve vocals similar to those in “Money Machine” by 100 Gecs in Logic Pro, you can follow these steps:

- Start by recording your vocals in Logic Pro. Make sure your microphone is of good quality and set up your recording environment to minimize background noise.

- Once you have your vocals recorded, you can use Logic Pro’s built-in EQ and compressor to shape the sound of your voice. Listen to the “Money Machine” vocals and try to replicate the EQ settings and compression levels to achieve a similar sound.

- Use pitch correction to make your vocals sound more in tune. You can use Logic Pro’s Flex Pitch or Melodyne to adjust the pitch of individual notes in your vocal performance. Again, listen to the “Money Machine” vocals and try to replicate the pitch correction used in the song.

- Experiment with vocal effects such as distortion, delay, and reverb to add texture and depth to your vocals. Use Logic Pro’s built-in effects or third-party plugins to achieve the desired effect.

- Layer your vocals to create a fuller sound. You can record multiple takes of your vocals and pan them left and right to create a stereo effect. You can also add harmonies and ad-libs to make your vocals more interesting.

- Finally, use automation to adjust the volume and effects of your vocals throughout the song. Listen to “Money Machine” and notice how the vocals are treated differently in different parts of the song.

Remember that achieving a sound like “Money Machine” requires a combination of vocal technique, production skills, and creativity. Don’t be afraid to experiment and try new things to create a unique sound that suits your music.”

Again, both are impressive responses that would get even a novice pretty close to the sound they’re after. If that novice ran into technical issues along the way, they could just go back to item one in this article.
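ChatGPT’s vocal advice tells you to “replicate the EQ settings and compression levels” by ear, which is easier if you know what a compressor’s threshold and ratio do to level. In the simplest (hard-knee, static) model, anything above the threshold is reduced so that every `ratio` dB of input overshoot becomes 1 dB of output overshoot. The Python sketch below computes that curve. It ignores attack, release, knee and makeup gain, so it is not a model of Logic’s Compressor plugin, and the -18 dB threshold and 4:1 ratio are arbitrary example settings, not values taken from “Money Machine.”

```python
def compressed_level_db(input_db: float, threshold_db: float, ratio: float) -> float:
    """Static hard-knee compressor curve (no attack/release, no knee, no makeup gain)."""
    if ratio < 1.0:
        raise ValueError("ratio must be >= 1.0")
    if input_db <= threshold_db:
        return input_db  # below threshold: level is untouched
    # Above threshold: the overshoot is divided by the ratio.
    return threshold_db + (input_db - threshold_db) / ratio


def gain_reduction_db(input_db: float, threshold_db: float, ratio: float) -> float:
    """How many dB the compressor pulls the level down at this input level."""
    return input_db - compressed_level_db(input_db, threshold_db, ratio)


THRESHOLD_DB = -18.0  # example setting (assumption)
RATIO = 4.0           # example setting (assumption)

for level in (-30.0, -18.0, -12.0, -6.0, 0.0):
    out = compressed_level_db(level, THRESHOLD_DB, RATIO)
    gr = gain_reduction_db(level, THRESHOLD_DB, RATIO)
    print(f"in {level:6.1f} dBFS -> out {out:6.1f} dBFS (gain reduction {gr:4.1f} dB)")
```

With these settings a 0 dBFS peak comes out at -13.5 dBFS (13.5 dB of gain reduction) while a -30 dBFS passage passes through unchanged, which is the evening-out effect you hear on heavily compressed pop vocals.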

3. As a Songwriting Partner and Empathetic Life Coach

I used the first prompt below because I am genuinely having difficulty finishing a song I’m writing, and the second as a test to see how ChatGPT would approach a key change at the end of a song. ChatGPT has an expansive understanding of music theory, and while I don’t recommend it as a substitute for your own organic creativity, the suggestions it offers are generally aesthetically pleasing and theoretically sound. On top of that, if you’re specific about how you’re feeling about a particular topic, it at least comes across as empathetic.

Prompt: I’m feeling like I have hit a creative wall with a song I am producing. The music and lyrics for the verses are complete, but I’m stuck on the chorus. The chords in the verses are A minor, G major, C major and F major, played in a repeating pattern. Can you suggest some ideas for a chorus? Also, how can I be less hard on myself?

ChatGPT Response: “When it comes to coming up with a chorus, one idea is to try playing around with the melody and structure of the verses. You can also try experimenting with different chord progressions, rhythms and harmonies to create a new and catchy chorus that complements the verses. Here are a few tips to help you come up with a chorus for your song:

- Focus on the main message or theme of your song. Your chorus should reflect the overall sentiment of the song and capture the listener’s attention.

- Try playing around with different chord progressions. For example, you could try using the chords E minor, D major, C major, and G major for the chorus.

- Experiment with different melodies and rhythms. Try humming or singing different melodies over the verse chords and see what feels natural.

- Keep it simple. A catchy chorus doesn’t have to be overly complicated. Sometimes, the simplest melodies and lyrics can be the most effective.

As for being less hard on yourself, it’s important to remember that creativity is a process, and it’s normal to hit roadblocks along the way. Don’t be too hard on yourself if you’re feeling stuck. Take a break, listen to some music that inspires you, and come back to it with fresh ears. Sometimes, stepping away from a project for a little while can give you a new perspective and help you overcome creative blocks.”
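ChatGPT’s chorus suggestion is more interesting than it first looks. The verse chords (A minor, G, C, F) are all diatonic to C major, while the suggested chorus chords (E minor, D, C, G) are all diatonic to G major, so one way to read the suggestion is that the chorus would move to the key a fifth above. The Python sketch below checks which major keys contain a given set of triads. It is a simplification: it uses sharps-only note names and only the seven diatonic triads of each major key (a natural minor key shares the triads of its relative major), so borrowed chords and flat spellings are out of scope.

```python
NOTES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]             # whole/half-step pattern of the major scale
QUALITIES = ["", "m", "m", "", "", "m", "dim"]  # I ii iii IV V vi vii°


def diatonic_triads(tonic: str) -> list[str]:
    """Chord names of the seven diatonic triads in a major key (sharps-only spelling)."""
    idx = NOTES.index(tonic)
    chords = []
    for step, quality in zip(MAJOR_STEPS, QUALITIES):
        chords.append(NOTES[idx % 12] + quality)
        idx += step
    return chords


def keys_containing(chords: list[str]) -> list[str]:
    """Major keys whose diatonic triads include every chord in `chords`."""
    return [key for key in NOTES if set(chords) <= set(diatonic_triads(key))]


verse = ["Am", "G", "C", "F"]
chorus = ["Em", "D", "C", "G"]  # ChatGPT's suggested chorus chords
print("verse fits:", keys_containing(verse))
print("chorus fits:", keys_containing(chorus))
```

Running it reports C major for the verse and G major for the chorus. Whether that lift suits the song is a creative call, but it explains why the suggested chorus would feel brighter than the verse.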

Prompt: I want to put a key change at the end of a song, which is in the key of G Major. Can you offer two suggestions?

ChatGPT Response: “Sure, here are two possible key change options to consider for your song in G Major:

- Key change to A Major: One possible key change for your song could be to move up a whole step to A Major. This would give you a new set of chords to work with, including A Major, B minor, C# minor, D Major, E Major, F# minor, and G# diminished. This key change can create a sense of lift and excitement towards the end of the song, as it moves to a brighter and more uplifting tonality.

- Key change to E Major: Another option could be to modulate to the relative major key of E Major. This would give you a new set of chords to work with, including E Major, F# minor, G# minor, A Major, B Major, C# minor, and D# diminished. This key change can create a sense of resolution and finality towards the end of the song, as it moves to a tonality that is closely related to the original key of G Major. (What happened here? E major is not G major’s relative key; that would be E minor. Sometimes ChatGPT gets it wrong.)

Ultimately, the key change you choose will depend on the specific mood and emotion you want to convey in your song, as well as the musical ideas you want to explore. It’s always a good idea to experiment with different options and see what feels right for your particular song.”
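The E major mix-up can be checked mechanically. A major key’s relative minor is built on its sixth degree, so G major’s relative is E minor; E major is the parallel major of E minor and shares none of G major’s diatonic triads. The Python sketch below, using a simplified sharps-only spelling (fine for G, A and E, all sharp keys), builds the diatonic triads of a major key, finds its relative minor, measures the distance between two tonics in semitones, and lists the triads two keys have in common.

```python
NOTES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]             # whole/half-step pattern of the major scale
QUALITIES = ["", "m", "m", "", "", "m", "dim"]  # I ii iii IV V vi vii°


def major_scale(tonic: str) -> list[str]:
    """The seven notes of a major scale (sharps-only spelling)."""
    idx = NOTES.index(tonic)
    scale = []
    for step in MAJOR_STEPS:
        scale.append(NOTES[idx % 12])
        idx += step
    return scale


def diatonic_triads(tonic: str) -> list[str]:
    """Chord names of the triads built on each scale degree."""
    return [note + q for note, q in zip(major_scale(tonic), QUALITIES)]


def relative_minor(tonic: str) -> str:
    """The relative minor is built on the 6th degree of the major scale."""
    return major_scale(tonic)[5] + "m"


def semitones_up(from_tonic: str, to_tonic: str) -> int:
    """Upward distance between two tonics, in semitones (0-11)."""
    return (NOTES.index(to_tonic) - NOTES.index(from_tonic)) % 12


def shared_triads(key_a: str, key_b: str) -> list[str]:
    """Diatonic triads of key_a that also belong to key_b."""
    other = set(diatonic_triads(key_b))
    return [c for c in diatonic_triads(key_a) if c in other]


print("A major:", diatonic_triads("A"))
print("E major:", diatonic_triads("E"))
print("relative minor of G:", relative_minor("G"))
print("G -> A:", semitones_up("G", "A"), "semitones, shared:", shared_triads("G", "A"))
print("G -> E:", semitones_up("G", "E"), "semitones, shared:", shared_triads("G", "E"))
```

Its triad lists for A major and E major match ChatGPT’s. For G to A it reports a whole step (2 semitones) and two shared triads (B minor and D major); for G to E major it finds none, which is hard to square with the claim that E major is “closely related” to G major.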

4. As A Plugin Designer and Developer

Inspired by the concept that you can use ChatGPT to generate Reaper scripts that make working within that particular DAW more efficient, I decided to test whether it could help me build instruments and effects in Max for Live. Check out the prompt below and the response, which was a valiant effort on ChatGPT’s part.

Prompt: Create a Max for Live patch that is a delay-like effect that gets gradually more distorted with each repeat.

ChatGPT Response: “As an AI language model, I don’t have the ability to create Max for Live patches directly, but I can guide you through the process of creating one that fits your needs. Here are the steps to create a Max for Live patch that is a delay-like effect that gets gradually more distorted with each repeat:

- Open Max for Live in Ableton Live, and create a new patch.

- Add a delay effect to the patch by dragging the “Delay” object from the Max for Live Devices Browser to the patch.

- Connect the audio input of the delay to the audio input of the patch, and the audio output of the delay to the audio output of the patch.

- Add a “Wave Shaper” object to the patch by dragging it from the Max for Live Devices Browser.

- Connect the output of the delay effect to the input of the wave shaper object.

- Adjust the settings of the wave shaper object to introduce distortion to the delayed signal. You can do this by adjusting the “Drive” and “Mix” parameters.

- Add a “Line” object to the patch by dragging it from the Max for Live Devices Browser.

- Connect the output of the wave shaper object to the input of the line object.

- Adjust the settings of the line object to gradually increase the distortion over time. You can do this by adjusting the “Duration” and “End Value” parameters.

- Play with the parameters until you get the desired effect.

- Save the patch as a preset so you can easily use it in your productions.

You can now use this patch as a delay effect that gradually gets more distorted with each repeat.”
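One reason the patch needed extra work: in ChatGPT’s recipe the wave shaper sits after the delay and a “Line” object ramps the distortion over time, so the amount of distortion follows elapsed time rather than the repeat count. One way to tie distortion to each repeat is a multi-tap echo in which tap n is both quieter and driven harder into a soft clipper. The Python sketch below shows that signal flow on a plain list of samples. It is not a Max patch, and the function names, the tanh clipper and every default value are illustrative assumptions. (A feedback delay with the saturator inside the loop is the more common hardware-style alternative; there, each echo passes through the saturator once more than the previous one.)

```python
import math


def saturate(x: float, drive: float) -> float:
    """tanh soft clipper, normalised so a full-scale input (+/-1) still peaks at +/-1."""
    return math.tanh(drive * x) / math.tanh(drive)


def distorted_echoes(
    dry: list[float],
    sample_rate: int,
    delay_s: float = 0.3,     # spacing between repeats (illustrative default)
    repeats: int = 6,         # number of echoes to render
    decay: float = 0.6,       # level multiplier applied per repeat
    base_drive: float = 1.0,  # starting drive (illustrative default)
    drive_step: float = 1.5,  # extra drive added for every repeat
    mix: float = 0.5,         # 0 = dry only, 1 = echoes only
) -> list[float]:
    """Multi-tap echo: repeat n is delayed n * delay_s, scaled by decay**n,
    and soft-clipped with drive base_drive + n * drive_step."""
    if base_drive <= 0 or drive_step < 0:
        raise ValueError("base_drive must be > 0 and drive_step >= 0")
    d = max(1, round(delay_s * sample_rate))  # delay in samples
    n_out = len(dry) + repeats * d            # room for the echo tail
    wet = [0.0] * n_out
    for n in range(1, repeats + 1):
        drive = base_drive + n * drive_step   # harder clipping on every repeat...
        gain = decay ** n                     # ...and a quieter echo
        offset = n * d
        for i, x in enumerate(dry):
            wet[i + offset] += gain * saturate(x, drive)
    padded = list(dry) + [0.0] * (n_out - len(dry))
    return [(1.0 - mix) * a + mix * w for a, w in zip(padded, wet)]
```

For example, `distorted_echoes(samples, 48_000)` returns the dry signal plus six progressively dirtier, quieter echoes spaced 0.3 s apart, with an extra 1.8 s of tail. In Max itself, the delay line would typically be built from `tapin~`/`tapout~` objects rather than from a stock delay device.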

I’m not switching to Reaper. Please stop trying to get me to switch to Reaper.

This response is pretty good, but not as straightforward as some of the responses to the other questions. As someone with a basic understanding of Max for Live who is by no means an expert, I needed a fair amount of experimentation and research outside of ChatGPT to get my effect up and running. I believe future updates will make it more capable at this kind of task, and I’m truly looking forward to that. Check out this next prompt, in which I ask ChatGPT to generate a unique plugin effect.

Prompt: Provide me an idea for a unique effect plugin that transforms audio in wild and expressive ways that isn’t currently available for any DAW.

ChatGPT Response: “One idea could be a plugin that applies a “molecular distortion” effect to audio, inspired by the behavior of molecules in a heated gas. The plugin could take an audio signal and apply random, chaotic distortions to the signal, simulating the way that molecules in a heated gas move around in random directions and collide with each other. The plugin could have parameters to control the intensity and frequency of the distortions, as well as the amount of randomness and chaos in the effect. It could also include a filter section to allow users to shape the frequency content of the distorted audio. Another idea could be a “quantum entanglement” effect that applies a type of non-local correlation to the audio signal, similar to the way that particles can become entangled in quantum physics. The plugin could take two audio signals as inputs and apply a mathematical transformation that creates a type of “quantum entanglement” between the two signals. The result would be a wild and unpredictable transformation of the audio signals, with elements of both signals intermingling in unexpected ways. The plugin could have parameters to control the degree of entanglement and the frequency range of the effect, allowing users to create everything from subtle blends to extreme and unpredictable transformations. These are just a couple of ideas for unique audio effect plugins that could transform audio in wild and expressive ways that aren’t currently available for any DAW. The possibilities are endless, and with the right programming and design, it’s possible to create truly innovative and inspiring audio effects that push the boundaries of what’s possible with digital audio technology.”

Imagine the possibilities when AI can not only envision such an effect, but perhaps write the code for it, or at the very least explain more clearly how to create it yourself. It’s worth noting that, as of this writing, it is already possible to connect ChatGPT to Max for Live to create music within Ableton Live, even if the results sound very primitive.

5. As a Tool You Use When You Desire, but Not Always

One of my concerns about this technology is that it draws on the sum of a great deal of human knowledge that was created before, and therefore without, ChatGPT, so there is a danger in relying on it too heavily. How long until we get a feedback loop of ChatGPT suggesting that humans try something that other humans generated using ChatGPT, rather than with their own original thought and creativity? I’ll admit that after a few hours of experimenting with different prompts, I started to feel a bit overwhelmed by the possibilities, much like the feeling you get after spending an excessive amount of time clicking through synth plugin presets or tweaking guitar pedal settings instead of actually making music with the tools in front of you.

I am seeing a backlash against this kind of technology, and I am not surprised. After all, we are the same society that tried to ban synthesizers in the 1980s. But I believe the best response when you feel afraid of or threatened by something is to learn more about it. I recommend that everyone, regardless of profession, political affiliation or general outlook on humanity, spend some time using ChatGPT, because it isn’t going away anytime soon.

*Credit to my friend Jason Cummings for this brilliant scenario.
